Published November 24, 2025 · Updated January 27, 2026

How multimodal AI combines text, images, audio, video, and screen context to power real-world AI workflows in 2026.

A New Interface Layer for Human Intelligence

Multimodal AI is no longer experimental.

In 2026, AI systems can already understand and combine text, images, audio, video, and on-screen context within a single workflow. Instead of interacting with AI through isolated text prompts, users can now communicate using multiple inputs at once — just like humans do.

This shift is fundamentally changing how people write, design, analyze data, conduct research, and interact with software. AI is no longer just responding to instructions; it is interpreting context.

In this guide, we break down the most important multimodal AI tools available today, explain how they work, and show where they already outperform traditional text-only AI systems. You’ll see practical examples, real use cases, and clear explanations of the current limitations you should understand before integrating multimodal AI into your own workflows.

If you’re looking for a practical comparison of tools that are actually usable today, see our curated guide to Best Multimodal AI Tools (2026).

For years, working with AI meant typing prompts into a text box.

It responded.

You adjusted.

You repeated the loop until something useful emerged.

That approach was powerful — but fundamentally limited.

Text-only AI couldn’t see what you were looking at, hear your voice, understand visual layouts, or interpret what was happening on your screen. Every interaction required translation into words.

That era is ending.

Multimodal AI refers to AI systems that can process, interpret, and reason across multiple input types — including text, images, audio, video, screen context, and tool actions — within a single model or coordinated workflow. Instead of forcing humans to adapt to machines, multimodal AI adapts to how humans naturally communicate and work.

This guide is structured to help you quickly understand how multimodal AI works, where it’s already being used, and which tools matter most.

- A New Interface Layer for Human Intelligence

- What Is Multimodal AI?

- What Makes Multimodal AI Truly Different?

- The 2026 Multimodal Landscape: Four Categories That Matter

- Best Multimodal AI Tools in 2026

- How Multimodal AI Changes the Creative Process

- Business & Team Benefits: The Enterprise Shift

- Multimodality + Agents = A New Work Interface

- Real Multimodal Use Cases You’ll See Everywhere in 2026

- Expert Perspective: Why Multimodal AI Is a Structural Shift

- FAQ: Multimodal AI Explained

- Related Reading: Explore Multimodal AI Tools Further

- Conclusion: Multimodal AI Becomes the Default

Before looking at tools and use cases, it’s important to understand what multimodal AI actually is — and how it differs from traditional AI systems.

What Is Multimodal AI?

In 2026, multimodal AI refers to AI models that can process text, images, audio, video, screen context, and tool actions simultaneously within a single system.
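To make the definition concrete, here is a minimal sketch of what "multiple input types within a single system" can look like as a data structure. The `Part` and `MultimodalRequest` types, the modality labels, and the file names are hypothetical and used only for illustration; they are not the API of any tool mentioned in this guide.

```python
from dataclasses import dataclass, field
from typing import List, Literal

# Hypothetical modality labels, mirroring the input types named above.
Modality = Literal["text", "image", "audio", "video", "screen", "tool_action"]


@dataclass
class Part:
    """One piece of input in a single modality."""
    modality: Modality
    content: str  # the text itself, or a reference to a media file (assumption)


@dataclass
class MultimodalRequest:
    """A single request that bundles several modalities together."""
    parts: List[Part] = field(default_factory=list)

    def add(self, modality: Modality, content: str) -> "MultimodalRequest":
        self.parts.append(Part(modality, content))
        return self  # returning self allows chained calls

    def modalities(self) -> List[str]:
        """Distinct modalities in the request, in first-seen order."""
        seen: List[str] = []
        for part in self.parts:
            if part.modality not in seen:
                seen.append(part.modality)
        return seen

    def is_multimodal(self) -> bool:
        """True when the request mixes at least two input types."""
        return len(self.modalities()) > 1


# Illustrative request: a question, a screenshot, and a voice note together.
request = (
    MultimodalRequest()
    .add("text", "Why does this chart dip in March?")
    .add("image", "dashboard_screenshot.png")
    .add("audio", "voice_note.wav")
)
print(request.modalities())
print(request.is_multimodal())
```

The point of the sketch is that the question, the screenshot, and the voice note travel as one request, so the system can interpret them together rather than one at a time.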

Multimodal AI is increasingly used in AI assistants, creative tools, enterprise automation, and autonomous agent systems.

To work effectively with these systems, many professionals rely on guiding frameworks found in the AI Prompt Writing Guide 2026 (structured prompting for multimodal systems) — foundational knowledge that pairs perfectly with multimodal workflows.

This article explores the capabilities, tools, workflows, use cases, and real-world impact of multimodal AI — and why 2026 marks the beginning of a new era in human–machine collaboration.

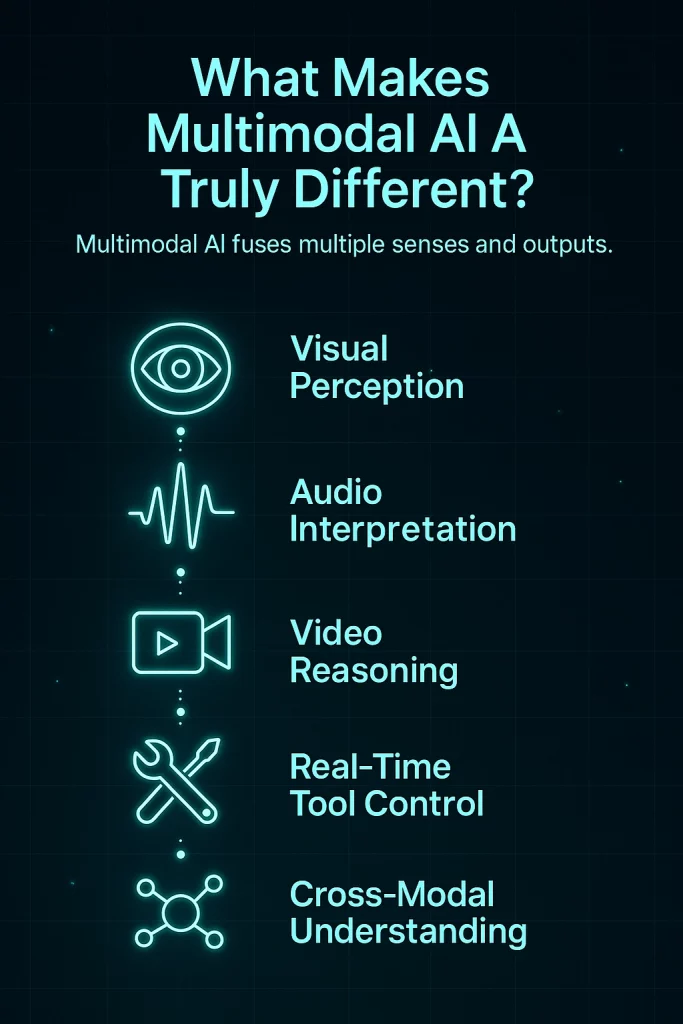

What Makes Multimodal AI Truly Different?

Traditional AI models interpret text and generate text.

Multimodal AI does much more:

- visual perception: understanding images, screenshots, designs, and charts. This layer is built on image-based multimodal systems that allow AI models to reason across visual input and language simultaneously.

- audio interpretation: interpreting tone, speech, emotion, ambient sound

- video reasoning: following motion, sequences, demonstrations

- spatial understanding: UI layouts, object relationships, screen context

- cross-modal reasoning: combining modalities into unified understanding

- real-time tool control: performing actions across apps and interfaces

The result is AI that takes in context through the same channels people use, while processing that information at machine scale.

If you want a structured way to design better prompts for these systems, our breakdown of AI Prompt Frameworks is a solid foundation to pair with multimodal reasoning.

The 2026 Multimodal Landscape: Four Categories That Matter

Multimodal AI tools are settling into four dominant groups.

A. Real-Time Multimodal Assistants

These systems can watch your screen, listen to your voice, understand your documents, and take action across tools.

This evolution is tied directly to the rise of automation agents and autonomous workflows, covered in depth in The Future of AI Workflows.

B. Multimodal Creative Suites

Creative professionals experience the biggest leap.

2026 creative tools can:

- turn a sentence into a full animated scene

- maintain consistent characters and lighting

- generate music aligned to emotional tone

- transform sketches into polished designs

- edit video automatically

- understand mood boards, references, and style cues

Many of these creative workflows are powered by video-centric multimodal AI tools that combine text, motion, visual reasoning, and timing into a single creative pipeline.

If you want curated inspiration for visual or audio prompting, see our guide on Best Prompt Libraries and Communities.

C. Multimodal Developer Tools

Developers now have AI that understands:

- code

- UI layouts

- architecture diagrams

- video recordings

- workflow demonstrations

These tools can fix bugs from screenshots, refactor UI from recordings, and translate diagrams into full components.

D. Multimodal Business & Operations Tools

Companies gain enormous leverage when AI can interpret:

- dashboards

- Excel sheets

- PDFs

- audio instructions

- UI contexts

- long workflows

For leaders deploying AI at scale, our guide How to Use AI for Business in 2026 provides frameworks for real-world adoption.

Best Multimodal AI Tools in 2026

As multimodal capabilities mature, a new generation of tools is defining how creators, analysts, developers, and teams work. These tools combine perception, reasoning, and action — enabling workflows that were impossible just one year ago.

Below is a curated list of the most influential multimodal AI tools of 2026.

For readers who want a short, decision-focused selection of multimodal AI tools that hold up in real workflows, see our editorial comparison of the Best Multimodal AI Tools (2026).

1. OpenAI (GPT-5.2, GPT-Vision, Autonomous Agents)

OpenAI leads the multimodal shift with models that unify:

- image understanding

- screen perception

- video analysis

- real-time reasoning

- multi-step task execution

These models power many agent platforms, and they pair well with the structured prompting methods you’ll find in our AI Prompt Frameworks guide.

2. Google Gemini 2.0 Ultra

Gemini is a truly multimodal-first model capable of:

- interpreting audio, images, screenshots, and video

- performing long-context reasoning

- analyzing documents and charts with precision

Excellent for research, analysis, and enterprise automation.

3. Anthropic Claude 3.5 Sonnet / Opus Vision

Claude excels in structured reasoning and now offers:

- high-fidelity image interpretation

- multi-document understanding

- visual analytics

- early-stage video comprehension

A top choice for analysts and strategists — aligning with workflows from How to Use AI for Business in 2026.

4. Microsoft Copilot Studio (Multimodal Agents)

Designed for enterprise teams, Copilot integrates:

- UI navigation

- cross-app execution

- workflow automation

- voice-guided operations

A powerful tool for organizations building AI-driven internal processes.

5. Runway Gen-3 Alpha (Video + Motion Intelligence)

A breakthrough in multimodal video generation:

- storyboard → video

- consistent characters

- realistic motion paths

- cinematic camera logic

Ideal for creators upgrading their video workflows.

6. Pika 2.0

A fast, creator-friendly video tool with:

- natural language editing

- motion transformation

- lip-sync intelligence

- reference-driven scene generation

Great for fast iteration cycles.

7. Midjourney V7 + Video

Midjourney is expanding into multimodal workflows, offering:

- image + text workflows

- expanding video features

- style-consistent narrative generation

A core tool for high-end visuals.

8. ElevenLabs Multimodal

A major upgrade introducing:

- emotional voice acting

- voice-to-video alignment

- real-time dubbing

- multimodal studio pipelines

Perfect for narration-heavy workflows.

9. Luma Dream Machine

A frontier model for 3D → video synthesis:

- physical scene reasoning

- realistic camera paths

- advanced object interactions

Powerful for filmmakers and technical creators.

10. NVIDIA Omniverse AI Agents

For industrial, simulation, and robotics workflows:

- multimodal sensor fusion

- physics-based reasoning

- simulation-to-reality pipelines

A category-defining tool for engineering teams.

Multimodal AI tools don’t exist in isolation.

They are part of a broader AI tools ecosystem where text models, image generators, video systems, agents, and automation platforms increasingly work together.

This ecosystem view is explored further in our practical comparison of the Best Multimodal AI Tools (2026), where tools are evaluated based on workflow fit and real-world readiness.

To explore how multimodal systems connect with other AI capabilities — from creative tools to business platforms and emerging agent frameworks — see the full overview inside the AI tools ecosystem.

This hub maps how modern AI tools fit together, helping you understand not just which tools matter, but how they interact across real-world workflows.

How Multimodal AI Changes the Creative Process

Creators move from idea → friction → production to idea → outcome.

Key shifts:

1. Rapid Ideation

Brainstorm with voice, sketches, reference images, or mixed input.

2. Instant Storyboarding

AI generates scenes, motion paths, and visual structure automatically.

3. Automatic Editing Pipelines

Timing, cuts, color, sound — handled in one pass.

4. Voice-Driven Creation

You speak, and AI builds.

5. Aesthetic Intelligence

Multimodal AI understands style, mood, rhythm, and narrative structure.

Business & Team Benefits: The Enterprise Shift

Teams benefit even more than creators.

1. Better Decision-Making

AI understands visuals, reports, charts, and documents.

2. Real Context for Automation

AI sees what’s happening on your screen.

3. Faster Training

AI can learn a workflow by watching it performed, which is often faster than describing every step in text.

4. Automatic Knowledge Capture

Meetings become structured summaries.

5. Cross-Tool Coordination

AI connects insights and executes across apps.

To explore more, start with the collection inside the AI Guides Hub.

Multimodality + Agents = A New Work Interface

AI is no longer only reactive; it is becoming proactive.

Modern agents can:

- perceive

- plan

- decide

- execute

- evaluate

- improve

It’s the transition from “tell AI what to do” to “tell AI the outcome, and it handles the process.”

This is the heart of human–machine collaboration in 2026.
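The perceive, plan, decide, execute, evaluate, improve cycle listed above can be sketched as a simple loop. This is a toy illustration under stated assumptions, not how any particular agent platform is implemented: the `ToyAgent` class is hypothetical, and its "environment" is just a number it tries to reach.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyAgent:
    """Hypothetical agent whose only goal is to count up to `target`."""
    target: int
    value: int = 0
    step_size: int = 4
    log: List[int] = field(default_factory=list)

    def perceive(self) -> int:
        # Observe the environment: the remaining distance to the goal.
        return self.target - self.value

    def plan(self, gap: int) -> List[int]:
        # Propose candidate moves: a big step and a small fallback step.
        return [self.step_size, 1]

    def decide(self, gap: int, options: List[int]) -> int:
        # Choose the first (largest) move that does not overshoot the goal.
        for move in options:
            if move <= gap:
                return move
        return 0

    def execute(self, move: int) -> None:
        # Act on the environment and record what was done.
        self.value += move
        self.log.append(move)

    def evaluate(self) -> bool:
        # Check whether the requested outcome has been reached.
        return self.value == self.target

    def improve(self) -> None:
        # Adapt: if the usual step would now overshoot, halve it.
        if self.step_size > self.perceive():
            self.step_size = max(1, self.step_size // 2)

    def run(self, max_steps: int = 20) -> bool:
        for _ in range(max_steps):
            gap = self.perceive()
            options = self.plan(gap)
            move = self.decide(gap, options)
            self.execute(move)
            if self.evaluate():
                return True
            self.improve()
        return False


agent = ToyAgent(target=10)
reached = agent.run()
print(reached, agent.value, agent.log)
```

The caller states only the outcome (`target=10`); the loop works out the individual steps, which is the shift in interaction style described above.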

Real Multimodal Use Cases You’ll See Everywhere in 2026

1. Customer Support Copilots

Interpret tone + visuals + process flow.

2. Creative Autopilots

Script → visuals → voice → edit → publish.

3. Visual Inspectors

Analyze spreadsheets, dashboards, PDFs.

4. Meeting Intelligence

Extract decisions, insights, follow-ups.

5. Autonomous Research Analysts

Interpret charts, interviews, videos, layouts.

Expert Perspective: Why Multimodal AI Is a Structural Shift

Multimodal AI is not simply about adding more input types.

The real breakthrough lies in shared representation — models that understand how language, visuals, sound, and actions describe the same underlying intent.

This shift allows AI systems to move beyond reactive responses and actively participate in workflows.

Instead of translating one modality into another, multimodal systems reason across them simultaneously — enabling planning, execution, and evaluation in real-world environments.

This is why multimodal AI scales far beyond chatbots.

It becomes the interface layer for modern work.
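One common way to picture shared representation is a single vector space in which inputs from different modalities land close together when they describe the same thing. The sketch below uses hand-written toy vectors, not output from a real model, and the file names are made up; it only shows how cross-modal matching by cosine similarity could work under that assumption.

```python
import math
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (modality, label)


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-written toy vectors (assumption): a real system would get these
# from trained encoders, one per modality, mapping into the same space.
EMBEDDINGS: Dict[Key, List[float]] = {
    ("text", "a dog barking"): [0.9, 0.1, 0.0],
    ("image", "photo_of_dog.png"): [0.8, 0.2, 0.1],
    ("audio", "bark.wav"): [0.85, 0.05, 0.1],
    ("text", "quarterly revenue chart"): [0.0, 0.1, 0.9],
    ("image", "revenue_dashboard.png"): [0.1, 0.0, 0.95],
}


def nearest(query_key: Key, k: int = 2) -> List[Key]:
    """Return the k items most similar to the query, across all modalities."""
    query = EMBEDDINGS[query_key]
    scored = [
        (key, cosine(query, vec))
        for key, vec in EMBEDDINGS.items()
        if key != query_key
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [key for key, _ in scored[:k]]


# A text query retrieves the matching audio clip and photo, not the chart.
print(nearest(("text", "a dog barking")))
print(nearest(("text", "quarterly revenue chart"), k=1))
```

Because every modality lives in the same space, a text query can match an image or an audio clip directly, without first translating one modality into another.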

FAQ: Multimodal AI Explained

What is multimodal AI?

Multimodal AI refers to AI systems that can process and reason across multiple input types — such as text, images, audio, video, and screen context — within a single model or workflow.

How is multimodal AI different from traditional AI models?

Traditional AI models typically handle one modality at a time, most often text. Multimodal AI combines multiple modalities simultaneously, allowing systems to perceive context, reason across inputs, and take coordinated actions.

Where is multimodal AI used in practice?

In 2026, multimodal AI is used in creative tools, AI assistants, enterprise automation, developer workflows, and autonomous agent systems — anywhere context, perception, and action need to be combined.

Does multimodal AI replace prompt-based interaction?

No. Prompting remains foundational, but multimodal AI extends it. Instead of relying only on text prompts, users can interact through images, voice, screen context, and mixed inputs.

Why does multimodal AI matter for the future of work?

Because it enables AI systems to move beyond reactive responses and actively participate in workflows — supporting planning, execution, and decision-making across tools and environments.

Related Reading: Explore Multimodal AI Tools Further

Multimodal AI doesn’t replace existing tools — it connects them.

If you want to explore how multimodal capabilities translate into concrete platforms, workflows, and categories, these guides provide the next logical steps:

- Best Multimodal AI Tools (2026) — a practical, editorial comparison of tools that are ready for real-world use

- AI Tools Hub — a complete overview of the modern AI tools ecosystem, covering creative, business, automation, and developer platforms

- AI Image Creation Tools — how text-to-image and visual reasoning systems power multimodal workflows

- AI Video Creation Tools — video-centric AI platforms combining text, motion, and visual intelligence

- AI Productivity Tools — how multimodal AI improves focus, output, and knowledge work

- AI Automation Tools — using multimodal inputs to automate workflows across apps and systems

- AI Agents Guide — how multimodal perception enables autonomous agents and task execution

Together, these resources show how multimodal AI moves from capability → tool → workflow — forming the foundation of modern AI-driven work.

Conclusion: Multimodal AI Becomes the Default

In 2026, multimodal AI is no longer a niche upgrade; it is becoming the baseline for creativity, productivity, and automation across modern digital work.

Those who combine:

- structured prompting

- multimodal workflows

- agent-based orchestration

- cross-tool execution

unlock an entirely new capability layer — where AI systems don’t just respond, but actively participate in real-world workflows.

To explore how multimodal systems fit into the broader AI tools ecosystem, the AI Tools Hub provides a structured overview of the platforms shaping modern work.

And for those focused on implementation, strategy, and real-world adoption, the AI Guides Hub offers practical frameworks for applying multimodal AI across teams and organizations.

This marks the next evolution in human–machine collaboration — and the foundation for how work will be done going forward.
