Published January 7, 2026 · Updated January 7, 2026

In less than a year, LMArena has evolved from a niche chatbot comparison site into one of the fastest-growing AI startups in the market.

LMArena allows users to blindly compare responses from different large language models, ranking them based on human preference rather than vendor-reported benchmarks — offering a rare, neutral view of real-world model performance.

According to reporting by Reuters, LMArena’s valuation has surged to $1.7 billion following a $150 million funding round, tripling its value in roughly eight months.

At first glance, this looks like another headline in the AI investment boom.

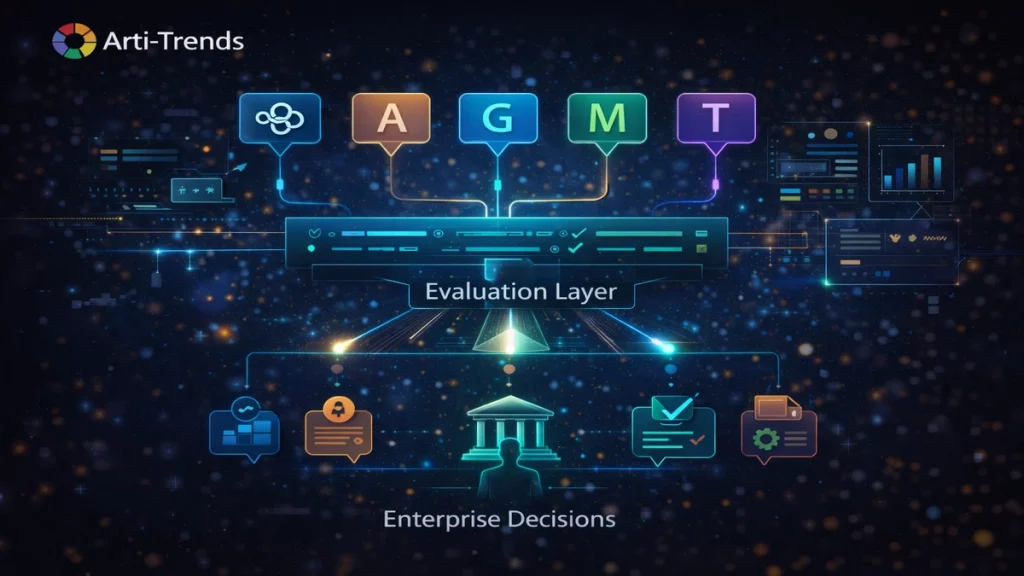

In practice, it signals something more structural: AI evaluation is becoming a core infrastructure layer for large language model adoption.

Key Takeaways

- LMArena reached a $1.7B valuation by focusing on AI evaluation rather than model development.

- Investors are backing infrastructure that helps organizations compare, test, and trust LLMs.

- Benchmarking is shifting from academic metrics to real-world decision support.

- As model performance converges, evaluation platforms gain strategic importance.

- Trust, transparency, and comparability are becoming competitive differentiators in AI.

What LMArena Actually Does — and Why It Matters

LMArena gained early traction with a simple mechanism: a user submits a prompt, two anonymous models respond, and the user votes for the better answer. Those pairwise human votes, rather than vendor-supplied benchmarks, determine each model's position on the leaderboard.
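To make the ranking idea concrete, here is a minimal sketch of how pairwise votes can be turned into a leaderboard. It uses a plain online Elo update, a standard rating method for head-to-head outcomes. LMArena's public methodology has described Elo-style and Bradley-Terry ratings, but this is not its actual pipeline; the model names, the K-factor of 32, and the starting rating of 1000 are illustrative assumptions.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_ratings(votes, k: float = 32.0, initial: float = 1000.0) -> dict:
    """Compute ratings from a sequence of (model_a, model_b, winner) votes.

    `winner` is "a", "b", or "tie". The K-factor and starting rating are
    illustrative defaults, not values taken from LMArena.
    """
    ratings = defaultdict(lambda: initial)
    for model_a, model_b, winner in votes:
        r_a, r_b = ratings[model_a], ratings[model_b]
        e_a = expected_score(r_a, r_b)
        s_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Zero-sum update: whatever A gains, B loses.
        ratings[model_a] = r_a + k * (s_a - e_a)
        ratings[model_b] = r_b + k * ((1.0 - s_a) - (1.0 - e_a))
    return dict(ratings)


# Hypothetical votes between three hypothetical models.
votes = [
    ("model-x", "model-y", "a"),
    ("model-x", "model-z", "a"),
    ("model-y", "model-z", "tie"),
    ("model-y", "model-x", "b"),
]
ratings = elo_ratings(votes)
for name, score in sorted(ratings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.1f}")
```

One design caveat: online Elo results depend on the order in which votes arrive. Fitting a Bradley-Terry model over all votes at once removes that order dependence, which is one reason batch estimation is often preferred when the full vote history is available.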

This approach addresses a growing enterprise problem:

- performance claims are difficult to verify

- traditional benchmarks are fragmented or outdated

- decision-makers lack neutral comparison tools

As adoption of large language models accelerates, independent and user-centric evaluation has shifted from an academic concern to an operational necessity.

Why Investors Are Betting on AI Evaluation Platforms

The scale and speed of LMArena’s valuation increase reflect a broader change in investor logic.

Rather than concentrating capital solely on:

- new foundation models

- marginal performance improvements

- compute-intensive breakthroughs

investors are increasingly funding bridging layers: platforms that enable organizations to choose, compare, and deploy AI systems with confidence.

This mirrors earlier technology cycles, where analytics, search ranking, and observability tools became critical once underlying platforms matured.

Benchmarking as Commercial Infrastructure

As model capabilities converge, differentiation increasingly depends on:

- reliability

- consistency across tasks

- safety and alignment

- cost-performance trade-offs

Static leaderboards are no longer sufficient.

Enterprises now need continuous evaluation frameworks that surface AI risks and reliability issues under real usage conditions.

Platforms like LMArena turn benchmarking into an ongoing process instead of a one-time test, which makes evaluation a monetizable infrastructure layer rather than a research artifact.

What This Means for Enterprises and Developers

For enterprises, AI evaluation platforms change procurement dynamics.

Instead of relying on brand reputation or marketing claims, teams can:

- test models against their own workflows

- compare outputs across providers

- track regressions and improvements over time

For developers and model builders, this introduces sustained competitive pressure: models are no longer judged in isolation, but side by side under continuous scrutiny.

A Signal of Where the AI Market Is Heading

LMArena’s rapid rise highlights a deeper market transition:

- from experimentation → operational deployment

- from demos → procurement decisions

- from novelty → accountability

This shift aligns closely with broader patterns in the AI market: as it matures, organizations demand measurable performance rather than promises.

Why This Matters Going Into 2026

If AI continues to integrate more deeply into business and society, trust infrastructure will matter as much as raw model capability.

LMArena’s $1.7B valuation suggests investors believe that:

- AI evaluation will be persistent, not temporary

- neutrality will retain value

- comparison across models will become unavoidable

In that sense, LMArena is not just another AI startup.

It represents the emergence of a new, indispensable layer in the AI stack.

Sources

This article draws on reporting by Reuters, covering LMArena’s funding round and valuation, and places it in the broader context of AI evaluation, benchmarking, and enterprise adoption trends.