Published January 27, 2026 · Updated January 27, 2026

Apple is making one of the most strategic AI decisions in its history — and doing so without fanfare.

Instead of incrementally improving Siri, Apple is rebuilding its voice assistant on top of Gemini, Google’s most advanced multimodal AI system.

The goal is not a smarter chatbot.

It is an assistant that can understand context, reason across tasks, and act inside the operating system — across apps, conversations, and time.

If successful, this move quietly resets what digital assistants are for — and what users should expect from them.

Key Takeaway

Apple is rebuilding Siri as a system-level AI agent by integrating Google’s Gemini, shifting the assistant from simple voice commands to context-aware execution across apps and workflows.

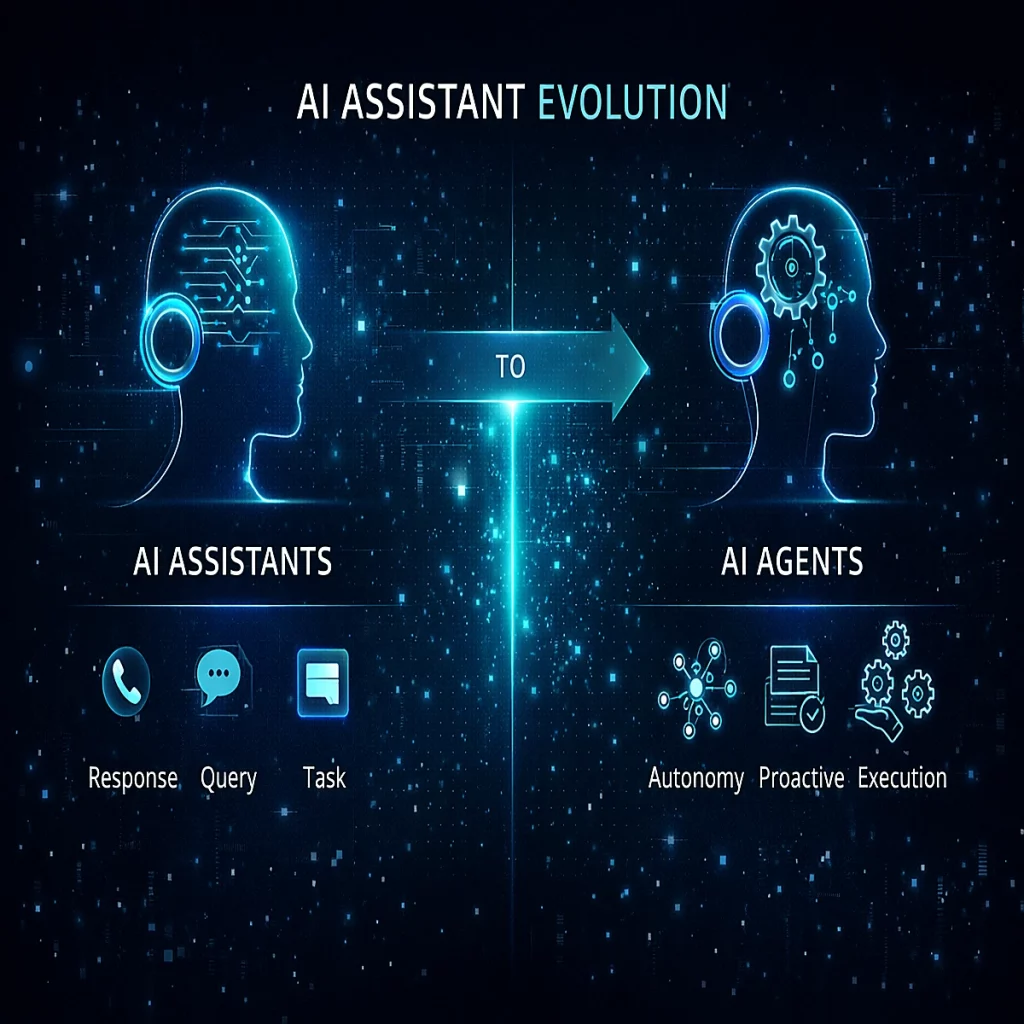

Why Siri Had to Be Rebuilt — Not Upgraded

For years, Siri followed a command-based interaction model:

- One request

- One action

- One response

This worked for simple tasks — setting timers, sending messages, checking the weather — but broke down as soon as users asked for anything involving:

- follow-up questions

- cross-app logic

- summarization

- decision-making

- real-world context

Anyone who has tried to chain multiple actions in Siri knows where it fails: the assistant forgets what you just said.

Gemini changes this foundation.

Instead of reacting to isolated commands, the new Siri is designed to maintain state, understand intent, and reason across inputs — voice, text, app state, and on-screen content.

This shift is powered by multimodal reasoning, where AI systems combine language, visual context, and application state into a single decision-making process.

This is not a UI refresh. It is an architectural reset.
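To make "maintaining state" concrete, here is a minimal Python sketch of a session that remembers earlier turns so that a later request can refer back to them. It is an illustration only: `Turn`, `Session`, `resolve`, and the sample values are hypothetical names invented for this example, not part of any Apple or Google API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    """One user request plus the entities it mentioned (hypothetical structure)."""
    text: str
    entities: dict = field(default_factory=dict)


@dataclass
class Session:
    """Keeps earlier turns so later requests can refer back to them."""
    turns: list = field(default_factory=list)

    def add(self, text: str, **entities: str) -> None:
        self.turns.append(Turn(text, dict(entities)))

    def resolve(self, key: str) -> Optional[str]:
        """Return the most recent value mentioned for `key`, if any."""
        for turn in reversed(self.turns):
            if key in turn.entities:
                return turn.entities[key]
        return None


session = Session()
# Illustrative data: the first request surfaces a deadline.
session.add("Summarize my last three emails from finance", deadline="Friday")
# The follow-up names no deadline of its own.
session.add("Draft a reply confirming the deadline")

# The reference is resolved from the earlier turn instead of being forgotten.
print(session.resolve("deadline"))  # Friday
```

A command-based assistant corresponds to a session that is discarded after every turn, which is why follow-up requests fail.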

Apple’s New Siri Strategy: Two Layers, One Experience

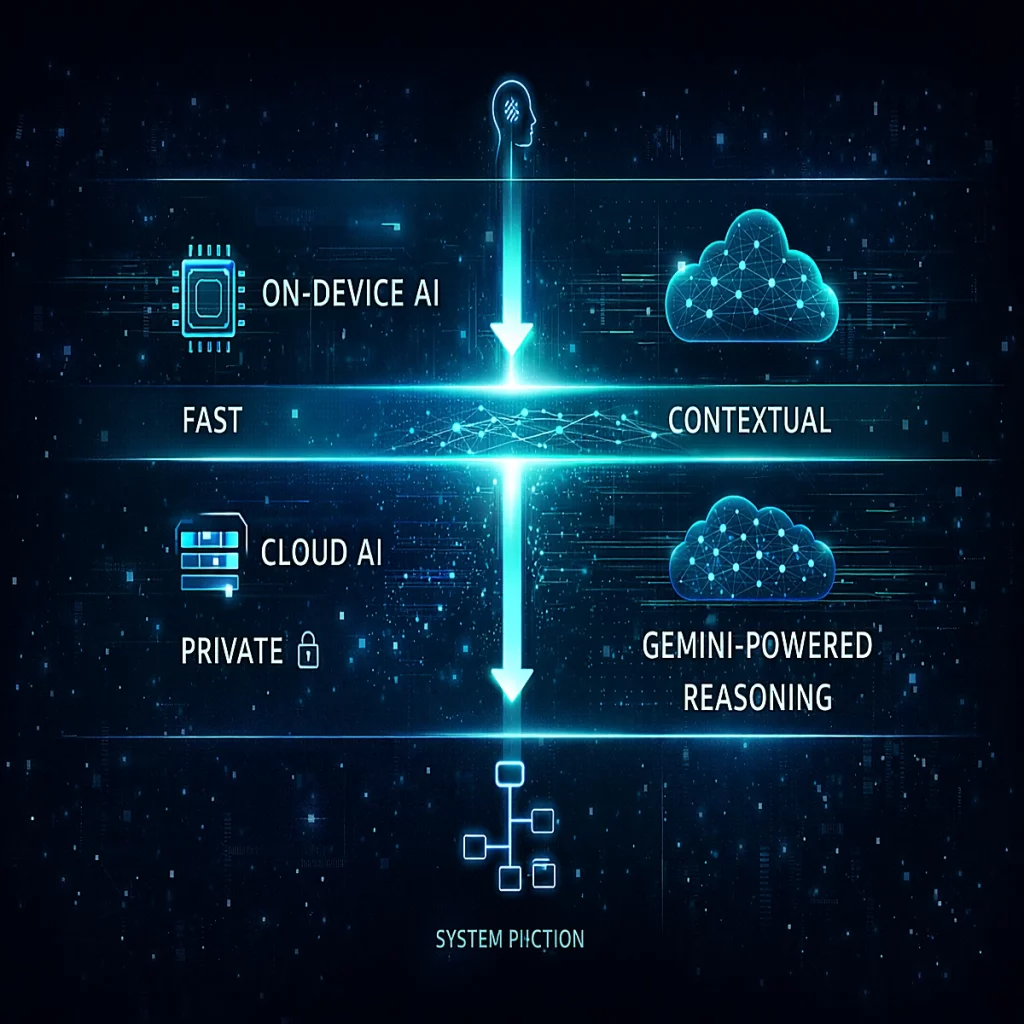

Apple is splitting Siri into two complementary systems — each optimized for a different class of tasks.

Siri Core: Fast, On-Device, Private

The first layer focuses on speed and reliability:

- System controls

- App navigation

- Simple automations

- Offline or low-latency tasks

This version runs primarily on-device and preserves Apple’s privacy-first design philosophy.

Siri Pro: Gemini-Powered, Context-Aware, Reasoning-Driven

The second layer integrates Gemini for advanced intelligence:

- Email and message summarization

- Calendar and task coordination

- Multi-step workflows

- Natural conversation with memory

- Context-aware suggestions

This is where Siri transitions from a voice interface into a general-purpose AI assistant.

This evolution mirrors the rise of AI agents — systems designed to plan, execute, and coordinate tasks across applications with minimal user intervention.
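The two-layer split can be pictured as a routing decision made for each request. The Python sketch below is an assumption-laden illustration: the task names are paraphrased from the two lists above, and `route`, `ON_DEVICE_TASKS`, and `CLOUD_TASKS` are hypothetical names, not a published Apple interface.

```python
# Hypothetical task categories, paraphrased from the two layer descriptions.
ON_DEVICE_TASKS = {"system_control", "app_navigation", "simple_automation"}
CLOUD_TASKS = {"summarization", "calendar_coordination", "multi_step_workflow"}


def route(task: str, online: bool) -> str:
    """Pick a layer for a task: 'core', 'pro', or 'unavailable'."""
    if task in ON_DEVICE_TASKS:
        # Fast path: handled locally, works offline.
        return "core"
    if task in CLOUD_TASKS:
        # Reasoning-heavy tasks need a connection to the cloud model.
        return "pro" if online else "unavailable"
    # Design choice in this sketch: unknown tasks stay on-device.
    return "core"


print(route("system_control", online=False))  # core
print(route("summarization", online=True))    # pro
print(route("summarization", online=False))   # unavailable
```

Keeping unknown tasks on-device is one possible default; a real system might instead ask the user or decline the request.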

What Actually Changes for iPhone Users

This shift matters only if it changes daily workflows. Here is what users are likely to notice first.

1. Siri Understands Ongoing Tasks

Instead of resetting after each request, the new Siri is designed to track intent across steps.

“Summarize my last three emails from finance — and draft a reply confirming the deadline.”

Siri understands:

- which emails matter

- what “the deadline” refers to

- what tone fits the context

2. Cross-App Actions Become Normal

“Add my flight details to tomorrow’s schedule and remind me when I should leave.”

This requires:

- reading confirmations

- updating calendar entries

- factoring location and traffic

- triggering reminders

Old Siri could not chain these steps from a single request. The new Siri is meant to handle them natively.
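The flight example boils down to a small calculation chained across apps: take the departure time, subtract an airport buffer and the travel time, then create a reminder. Here is a rough Python sketch under stated assumptions: the departure time, the 45-minute drive, and the two-hour buffer are illustrative values, and `leave_time` is a hypothetical helper rather than anything Siri exposes.

```python
from datetime import datetime, timedelta


def leave_time(departure: datetime, travel: timedelta,
               airport_buffer: timedelta = timedelta(hours=2)) -> datetime:
    """When to leave: flight departure minus airport buffer minus travel time."""
    return departure - airport_buffer - travel


# Illustrative values only. A real assistant would read the departure from a
# booking confirmation and the travel time from a live traffic estimate.
departure = datetime(2026, 1, 28, 14, 30)
travel = timedelta(minutes=45)

calendar_entry = {"title": "Flight", "start": departure}
reminder = {"title": "Leave for airport", "at": leave_time(departure, travel)}

print(reminder["at"])  # 2026-01-28 11:45:00
```

The arithmetic is trivial. The hard part is that each input lives in a different app, which is why this kind of request depends on system-level access.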

3. App-Aware Editing and Creation

“Continue where I left off in this document and make it more formal.”

Siri understands:

- the active app

- the current content

- stylistic intent

This is Gemini’s multimodal reasoning applied at OS level.

Old Siri vs New Siri: What’s Fundamentally Different

| Capability | Old Siri | New Siri (Gemini) |
|---|---|---|
| Conversational memory | ❌ None | ✅ Persistent |
| Cross-app workflows | ❌ Limited | ✅ Native |
| Context awareness | ❌ Minimal | ✅ Deep |
| Reasoning & summarization | ❌ Weak | ✅ Strong |
| Privacy control | ⚠️ Mixed | ✅ Explicit |

The key change is not intelligence alone — it is permission to act inside the system.

How Siri Compares to Other AI Assistants

| Feature | New Siri (Gemini) | ChatGPT | Google Assistant |
|---|---|---|---|
| OS-level control | ✅ Full (iOS) | ❌ None | ❌ Limited |
| App-native actions | ✅ Yes | ❌ No | ⚠️ Partial |
| Multimodal reasoning | ✅ Gemini | ✅ GPT-4/5 | ✅ Gemini |
| Context persistence | ✅ Strong | ✅ Strong | ⚠️ Moderate |
| Privacy-first design | ✅ Apple-grade | ⚠️ Account-based | ⚠️ Account-based |

ChatGPT excels at reasoning and explanation.

Siri’s advantage is execution.

Why Apple Chose Gemini Instead of Building Everything In-House

Apple has strong AI research teams — but training frontier models at scale requires:

- massive compute

- constant iteration

- global data infrastructure

By partnering with Google:

- Apple accelerates time-to-market

- Gemini supplies proven multimodal reasoning

- Apple retains control over UX, privacy, and system integration

This mirrors Apple’s historical playbook: own the experience, and partner on infrastructure where it makes sense.

Gemini operates as an intelligence layer — not a visible brand inside iOS.

Privacy and Trust: Where Apple Draws the Line

This integration raises inevitable questions.

Apple’s approach includes:

- Clear separation between on-device and cloud processing

- Explicit opt-in for Gemini-powered features

- Abstracted or anonymized context where possible

- No default access to raw personal data

The message is consistent: powerful when you allow it, private by default.

Whether users trust that balance will shape adoption.
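As a rough illustration of "explicit opt-in" combined with "abstracted or anonymized context", the Python sketch below refuses to prepare anything for the cloud unless the user has opted in, and masks email addresses before text leaves the device. Everything here is an assumption made for the example: `prepare_cloud_context` is a hypothetical function, and the simple regular expression stands in for whatever abstraction Apple actually applies.

```python
import re
from typing import Optional

# Deliberately simple pattern for this sketch; real redaction would cover
# far more than email addresses.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def prepare_cloud_context(text: str, opted_in: bool) -> Optional[str]:
    """Return redacted text for cloud processing, or None without opt-in."""
    if not opted_in:
        # Private by default: nothing is prepared for the cloud.
        return None
    return EMAIL.sub("[email]", text)


print(prepare_cloud_context("Reply to ana@example.com today", opted_in=True))
# Reply to [email] today
print(prepare_cloud_context("Reply to ana@example.com today", opted_in=False))
# None
```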

What This Means for the Future of AI Assistants

The assistant race is no longer about who answers questions best.

It is about who can act on your behalf — safely, accurately, and contextually — inside your digital life.

With Gemini-powered Siri, Apple gains:

- A credible leap in assistant capability

- Stronger iOS ecosystem lock-in

- A response to ChatGPT’s momentum

- A foundation for AI-first system interfaces

If execution matches ambition, this will be the most important update to Siri since it launched.

Bottom Line

Apple rebuilding Siri around Google’s Gemini is not about catching up.

It is about redefining what an assistant is allowed to do.

Not just talk.

Not just suggest.

But understand, coordinate, and act — across apps, time, and intent.

That shift matters far more than any single feature.

Sources & References

This article is based on a synthesis of publicly available information, industry reporting, and platform documentation, including:

- Apple — official product announcements, platform documentation, and public statements related to Siri, iOS, and Apple’s AI strategy

- Google — technical overviews and research publications on Google Gemini, including multimodal reasoning capabilities

- Coverage and analysis from established technology publications tracking AI assistant development, operating-system-level AI integration, and large language model deployment

- Independent analysis of AI assistant architectures, multimodal systems, and agent-based workflows conducted by the Arti-Trends News Desk

All interpretations, comparisons, and conclusions are editorial and reflect Arti-Trends’ independent analysis. This article does not contain sponsored content or paid endorsements.