Published November 27, 2025 · Updated January 9, 2026

Neural networks are the invisible engine behind nearly every major breakthrough in modern artificial intelligence. They power the language models that write your emails, the vision systems that detect disease in medical scans, the algorithms that personalize your feeds, and the multimodal assistants that understand text, images, audio, and video in real time.

Yet despite their impact, most people still don’t understand what neural networks actually are — or why they have become the foundation of modern AI. Millions of users interact with systems like ChatGPT, Gemini, and Midjourney every day without knowing how neural networks work, what makes them different from traditional algorithms, or why they outperform older machine-learning methods.

This guide is part of the AI Explained Hub — Arti-Trends’ structured learning center for understanding how artificial intelligence works under the hood. Here, we focus specifically on neural networks: the core technology that enables machines to see, hear, read, reason, and generate content at scale.

In this beginner-friendly explanation, you’ll learn:

- what neural networks are

- how they learn from data

- why deep learning changed everything

- and where these systems appear in real-world AI tools

If you want the broader foundation first, you can start with What Is Artificial Intelligence? or explore the full learning cycle in How Artificial Intelligence Works. From there, neural networks are the next logical layer.

What Neural Networks Really Are

At their core, neural networks are mathematical systems designed to recognize patterns in data.

They are inspired by the structure of the human brain, but they do not think, reason, or feel the way a person does. Instead, they process numbers through many small computational units called neurons, arranged in layers.

Each layer transforms the input slightly:

- early layers detect simple signals

- deeper layers combine those signals into more complex features

- the final layer produces a prediction

This layered design allows neural networks to learn directly from raw data, without requiring humans to specify which features matter. That is the crucial difference between neural networks and traditional software, which relies on manually written rules.

If you’ve ever wondered what neural networks really are, this hierarchy is the answer: they extract patterns step by step, turning simple signals into meaningful representations.

The Core Components of a Neural Network

A neural network is built from a small number of fundamental parts that work together to transform data into predictions.

Neurons (Units)

A neuron is a simple mathematical function.

It receives input values, multiplies them by learned weights, adds a bias, and passes the result through an activation function.

On its own, a single neuron does very little.

But when thousands or millions of neurons are connected, they can recognize complex patterns in images, text, sound, and numbers.
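
To make that concrete, here is a minimal Python sketch of a single neuron. The sigmoid activation and all of the numbers are assumptions picked for illustration; real networks learn their weights during training and often use other activation functions.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
bias = 0.1

output = neuron(inputs, weights, bias)
print(round(output, 3))  # weighted sum is -0.7, so this prints 0.332
```

Changing any weight or the bias changes the output, which is exactly what training exploits.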

Weights and Biases

Weights and biases are the parameters that a neural network learns during training.

- Weights determine how strongly each input influences the output.

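- Biases shift a neuron’s weighted sum before the activation function is applied, so the neuron can produce a useful output even when its inputs are zero.

During training, these values are repeatedly adjusted in small steps to reduce prediction errors.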

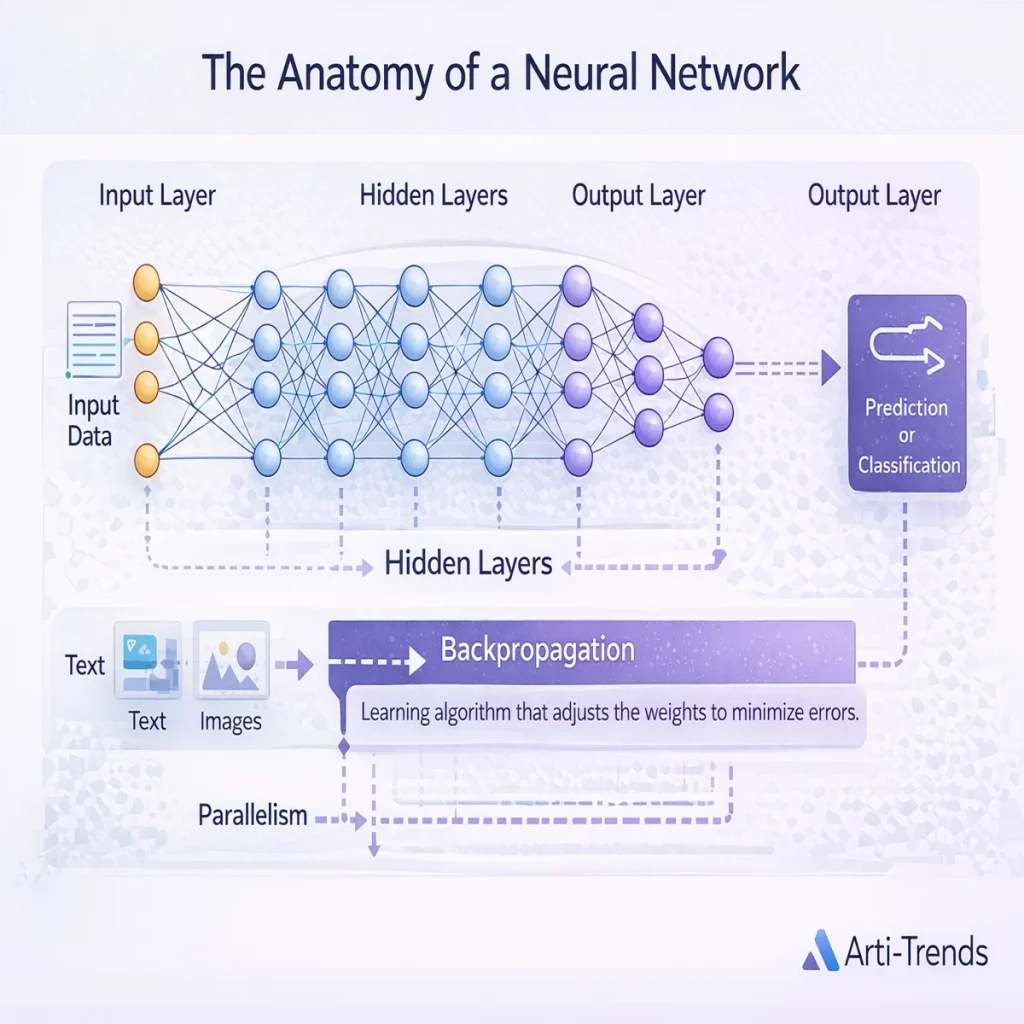

Layers

Modern neural networks are organized into layers:

- an input layer that receives the data

- multiple hidden layers that extract increasingly complex features

- an output layer that produces the final prediction

In general, deeper networks can learn more abstract patterns. This is the foundation of deep learning.
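
As a rough illustration of how layers stack, the sketch below wires an input layer, one hidden layer, and an output layer together in plain Python. The weights are hand-picked rather than learned, and the XOR task, the ReLU activation, and the helper names (`dense`, `xor_net`) are assumptions made for this example.

```python
def relu(z):
    """Pass positive values through unchanged; clamp negatives to zero."""
    return max(0.0, z)

def identity(z):
    """No activation: return the weighted sum as-is."""
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

# Hand-picked (not learned) parameters that make the network compute XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def xor_net(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)      # hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B, identity)[0]   # output layer

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", xor_net(a, b))
```

A single neuron of the kind described earlier cannot compute XOR on its own. The hidden layer supplies the intermediate features that make it possible.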

How Neural Networks Learn: Step-by-Step

Neural networks do not “understand” in a human sense.

They learn by repeatedly adjusting themselves to make better predictions.

This happens through a structured, iterative process:

Forward pass

The input data flows through the network and produces an initial prediction.

Loss calculation

A loss function measures how far that prediction is from the correct answer.

Backpropagation

Working backward from the loss, backpropagation computes how much each weight and bias contributed to the error.

Gradient descent

An optimization algorithm then nudges each weight and bias a small step in the direction that reduces the loss.

This cycle repeats thousands or even millions of times until the network converges on a model that performs well.
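
The four steps above can be sketched in a few lines of Python. This is a deliberately tiny example, not how production systems are trained: the model is a single linear neuron, the data comes from an assumed rule y = 2x + 1, the gradients are written out by hand instead of being computed by a framework, and the learning rate and step count are arbitrary choices.

```python
# Toy dataset generated from y = 2x + 1 (an assumption for this sketch).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0          # parameters start at arbitrary values
learning_rate = 0.05     # hypothetical step size

def mean_squared_error(preds, targets):
    """Average squared difference between predictions and correct answers."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

history = []
for step in range(1000):
    # 1. Forward pass: compute predictions with the current parameters.
    preds = [w * x + b for x in xs]

    # 2. Loss calculation: measure how wrong the predictions are.
    loss = mean_squared_error(preds, ys)
    history.append(loss)

    # 3. Backpropagation: gradient of the loss with respect to each
    #    parameter, derived by hand here using the chain rule.
    n = len(xs)
    grad_w = sum(2 * (p - t) * x for p, t, x in zip(preds, ys, xs)) / n
    grad_b = sum(2 * (p - t) for p, t in zip(preds, ys)) / n

    # 4. Gradient descent: step each parameter against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.3f}, last loss={history[-1]:.6f}")
```

After enough iterations, `w` and `b` settle close to 2 and 1, the values used to generate the data, and the recorded loss shrinks toward zero.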

For a broader, system-level view of this learning loop, explore How Artificial Intelligence Works.

The Main Types of Neural Networks: A Practical Overview

Different problems require different neural network architectures.

Here are the most important types used in modern AI systems.

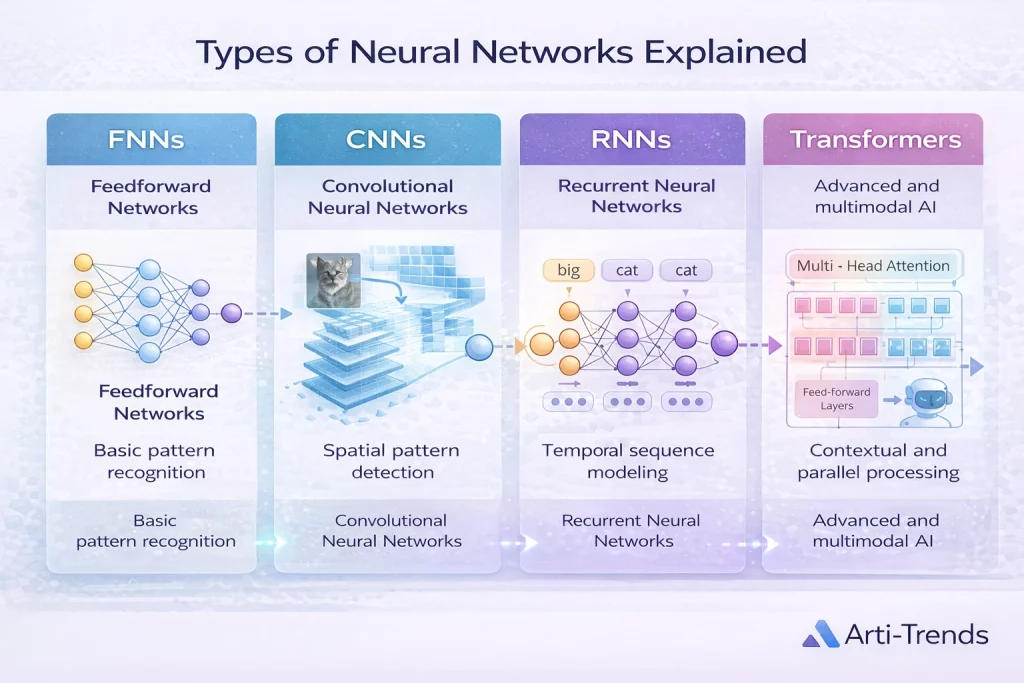

Feedforward Neural Networks (FNNs)

Feedforward networks are the most basic form of neural network.

Data moves in one direction, from input to output, without loops.

They are commonly used for:

- structured data

- simple classification

- basic regression tasks

Convolutional Neural Networks (CNNs)

CNNs are designed for image and video data.

They specialize in detecting spatial patterns such as:

- edges

- shapes

- textures

- objects
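
A hedged sketch of the core operation behind this: a small filter slides across the image and responds strongly wherever the pattern it encodes appears. The toy image and the vertical-edge filter below are hand-written assumptions. In a real CNN the filter values are learned during training, and the helper name `convolve2d` is just a label for this example.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    Deep learning libraries call this convolution; strictly speaking it is
    cross-correlation, because the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 4x6 toy "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-written vertical-edge filter (learned, not hand-written, in a real CNN).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)  # each row prints [0, 3, 3, 0]
```

The output is nonzero only where the filter window straddles the dark-to-bright boundary, which is how an early layer flags an edge. Deeper layers apply the same idea to the outputs of earlier ones.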

CNNs power many visual AI systems, including:

- medical imaging

- autonomous driving

- face recognition

- smartphone photography

Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data such as:

- text

- speech

- time-series signals

Unlike feedforward networks, RNNs pass information from one step to the next.

This gives them a form of memory, allowing them to model how earlier inputs influence later ones — which is essential for language, audio, and temporal patterns.
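
A minimal sketch of that memory, assuming a one-dimensional hidden state, a tanh activation, and made-up weights (`w_x`, `w_h`, and `b` are hypothetical names and values, not taken from any particular library):

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    h = 0.0                     # initial hidden state: nothing remembered yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Both sequences end with the same input (0.0), but their histories differ.
states_a = run_rnn([1.0, 0.0])
states_b = run_rnn([-1.0, 0.0])
print(states_a[-1], states_b[-1])
```

The final input is identical in both runs, yet the final hidden states differ (one positive, one negative) because each still carries a trace of the earlier input.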

Transformers

Transformers are the neural network architecture that powers most of today’s advanced AI systems.

Unlike older sequence models, which read their input one step at a time, transformers can capture long-range relationships in data and process all positions of a sequence in parallel, which makes them far easier to scale.
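
The mechanism behind both properties is attention, which this guide does not cover in detail. As a rough sketch only, the plain-Python version below implements scaled dot-product attention on hypothetical two-dimensional token vectors. Real transformers first pass the tokens through learned projections to get queries, keys, and values, use many attention heads, and run the whole computation as batched matrix operations. All of that is omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)                        # subtract the max for stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this position with every position, near or far.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        mixed = [
            sum(w * v[dim] for w, v in zip(weights, values))
            for dim in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a 3-token sequence; tokens 0 and 2 are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])
```

Token 0 gives tokens 0 and 2 the same weight even though token 2 is further away, because the score depends on content rather than distance. Each query is also computed independently of the others, which is what lets real implementations process every position at once.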

Transformers drive technologies such as:

- GPT-based language models

- Google Gemini

- Claude

- many recent diffusion-based image generators

- multimodal search systems

- agentic AI workflows

This architecture is a major reason modern AI can handle language, images, and context at a level that earlier models could not.

To see how transformers actually work under the hood, explore Transformers Explained.

How Neural Networks Show Up in Real Life

Neural networks power a large share of the AI technology people use every day — often without realizing it.

Smartphones

- real-time photo enhancement

- face unlock

- predictive text

- voice recognition

Business and productivity

- customer segmentation

- fraud detection

- sales forecasting

- AI writing and research tools

- workflow automation

Healthcare

- early tumor detection

- radiology classification

- anomaly detection in MRI and X-ray scans

- predictive health scoring

Across all of these domains, neural networks turn raw data into usable insight.

For more real-world examples across industries, explore How AI Works in Real Life.

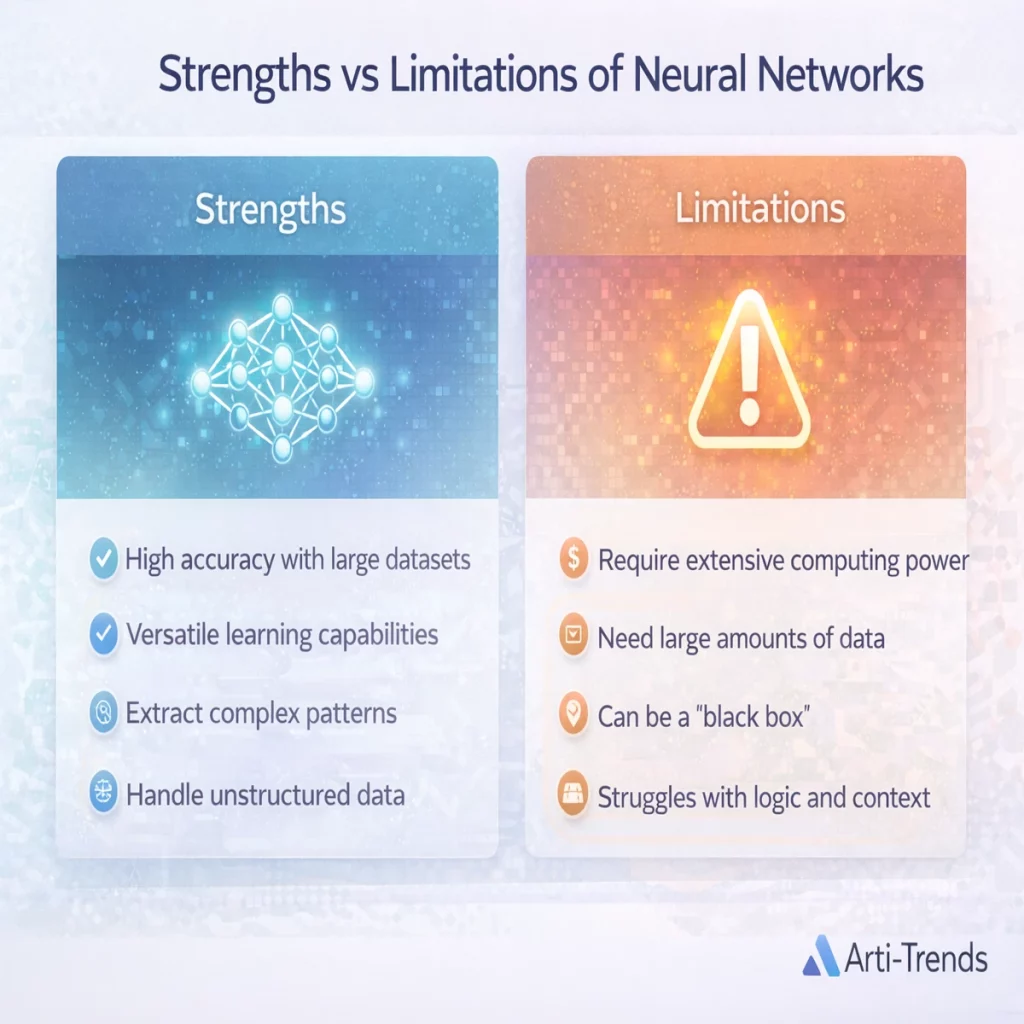

Strengths and Limitations of Neural Networks

Neural networks deliver extraordinary performance — but they also come with important trade-offs.

Strengths

- exceptional performance on unstructured data such as images, audio, and text

- automatic feature learning without manual engineering

- scalable with large datasets and modern GPUs

- foundation for generative and multimodal AI systems

Limitations

- require large amounts of high-quality training data

- difficult to interpret, often functioning as “black boxes”

- sensitive to bias in the data they learn from

- can fail unpredictably in rare or unfamiliar situations

For a deeper, evidence-based exploration of these challenges, see The Limitations and Reliability of Artificial Intelligence.

Neural Networks vs Traditional Machine Learning

Traditional machine learning and neural networks are not interchangeable: they tend to suit different kinds of problems.

Traditional machine learning

- uses human-engineered features

- works well on smaller, structured datasets

- is more interpretable

- is usually faster and cheaper to train

Neural networks

- learn features automatically from raw data

- excel at perception tasks such as vision, speech, and language

- scale extremely well with more data and compute

- produce state-of-the-art results in modern AI

For a broader conceptual comparison of machine learning and artificial intelligence, see Machine Learning vs Artificial Intelligence.

The Evolution of Neural Networks

Understanding where neural networks came from helps explain where modern AI is going.

Early era

- perceptrons and shallow neural networks

- the development and popularization of backpropagation

- early attempts at pattern recognition

Deep learning era

- ImageNet breakthroughs in 2012

- convolutional networks dominate image recognition

- recurrent networks advance language modeling

Transformer era

- large language models match or exceed human-level scores on a number of benchmarks

- multimodal systems unify text, images, audio, and video

- agentic AI systems begin to emerge

- foundation models reshape entire industries

For a deeper continuation of this timeline, explore Deep Learning Explained.

When Neural Networks Are the Right Tool — and When They’re Not

Neural networks are powerful, but they are not the best solution for every problem.

Use neural networks when:

- the data is high-dimensional

- pattern recognition is required

- tasks involve images, audio, text, or video

- accuracy matters more than interpretability

Avoid neural networks when:

- the dataset is too small

- legal or regulatory explainability is required

- the task is rule-based or deterministic

- compute resources are limited

Conclusion: Why Neural Networks Matter in Modern AI

Neural networks are not just another AI technique — they are the core engine behind nearly every major breakthrough in modern artificial intelligence.

They allow machines to see, hear, read, and recognize patterns at a scale no team of people could match. From medical imaging and language translation to autonomous systems and creative tools, neural networks turn raw data into actionable intelligence.

But understanding neural networks is about more than technology.

It gives you clarity.

When you know how these systems learn, what their strengths are, and where their limits lie, you become better at:

- evaluating AI tools

- trusting results appropriately

- designing workflows

- and avoiding unrealistic expectations

Neural networks don’t think — but they power the systems that increasingly shape how we live, work, and create.

Continue Learning

To deepen your understanding inside the AI Explained cluster, explore:

- What Is Artificial Intelligence? — the full conceptual foundation behind modern AI

- How Artificial Intelligence Works — how models learn, predict, and improve

- Machine Learning vs Artificial Intelligence — where ML and deep learning fit inside AI

- Transformers Explained — the architecture behind modern language and multimodal models

- How AI Works in Real Life — practical examples across industries

For hands-on application, visit the AI Tools Hub.

For guided learning across all AI topics, continue through the AI Guides Hub.