Published November 17, 2025 · Updated January 5, 2026

If you want consistently strong output from AI, you need more than creativity — you need clarity. Most weak results don’t happen because the model is “wrong,” but because the prompt is. Small prompt mistakes may look harmless, yet they have an outsized impact on reasoning quality, structure, and usefulness.

In Part 1 of the Prompt Mastery series, we explored how small refinements dramatically improve output quality in How to Write Better ChatGPT Prompts (with Examples).

In Part 2, we showed how assigning AI a clear professional identity sharpens reasoning and tone in Act as a… Prompts: How Roles Transform AI Output.

In Part 3, we examined the structural thinking behind expert-level prompting in AI Prompt Frameworks Explained: The 4C Model and Beyond.

This article shifts the focus to diagnostics.

Instead of asking how to write better prompts, we ask a more revealing question:

Which prompt mistakes cause the most damage — and how do you fix them instantly?

If you’re new to prompting or want the full end-to-end foundation, start with the cornerstone guide AI Prompt Writing: The Ultimate Guide to Working Smarter (2026).

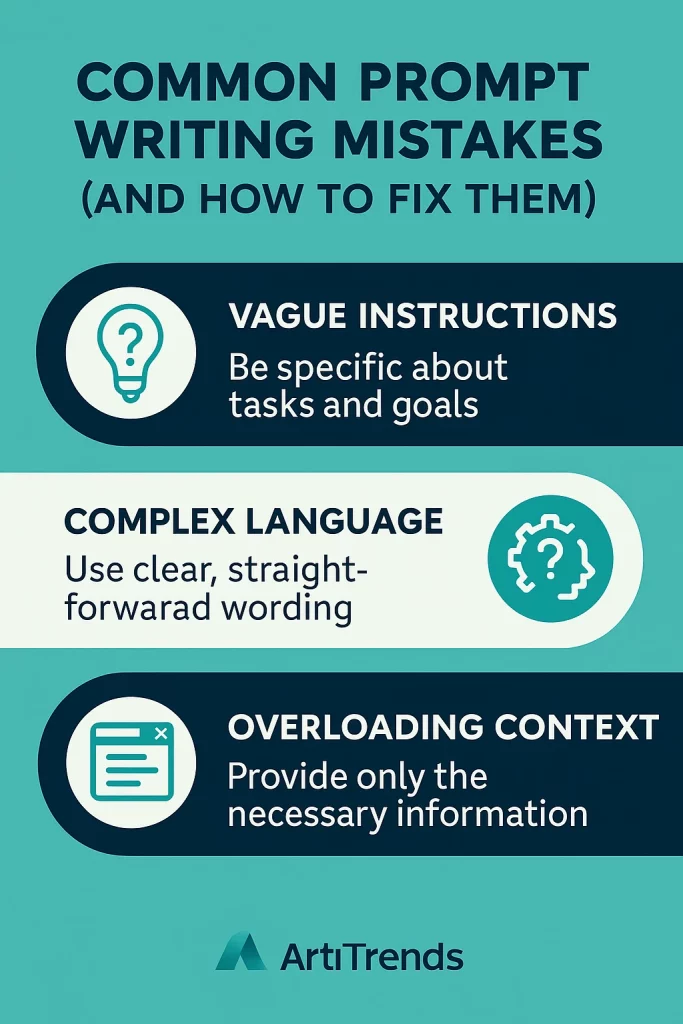

1. Vague Inputs That Force AI to Guess

When prompts are vague or incomplete, the model fills gaps based on probability instead of intention.

That’s why vague prompts lead to generic, shallow, repetitive, or inconsistent answers.

Weak prompt

“Write a summary of this report.”

Strong prompt

“Summarize this 12-page marketing report into a 250-word executive brief. Focus on key trends, risks, and actionable insights.”

The difference?

The second prompt adds context, constraints, purpose, and structure — the backbone of high-quality prompting.
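One way to make those four ingredients hard to forget is to treat them as fields you fill in before sending anything. The sketch below is illustrative only: `PromptSpec` and `build_prompt` are hypothetical names, and the "Trends, Risks, Actions" format line is an invented example, not part of the prompt above.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the ingredients of a strong prompt."""
    task: str                 # what the model should do
    context: str              # what it is working with
    purpose: str              # who the output is for, and why
    constraints: list[str] = field(default_factory=list)  # length, focus, etc.
    structure: str = ""       # desired shape of the output


def build_prompt(spec: PromptSpec) -> str:
    """Join the filled-in parts into one explicit instruction block."""
    lines = [spec.task, f"Context: {spec.context}", f"Purpose: {spec.purpose}"]
    if spec.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in spec.constraints)
    if spec.structure:
        lines.append(f"Format: {spec.structure}")
    return "\n".join(lines)


spec = PromptSpec(
    task="Summarize the attached marketing report as an executive brief.",
    context="A 12-page marketing report.",
    purpose="Give executives a fast read on where the market is heading.",
    constraints=["Maximum 250 words", "Focus on key trends, risks, and actionable insights"],
    structure="Three short sections: Trends, Risks, Actions.",  # invented example
)
print(build_prompt(spec))
```

If a field is hard to fill in, that is usually the gap the model would otherwise have to guess.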

To understand how structure shapes output, revisit AI Prompt Frameworks Explained: The 4C Model and Beyond.

What the research says

Recent research reinforces this idea. A 2025 study titled A Taxonomy of Prompt Defects in LLM Systems identifies six categories of prompt failures — including unclear intent, missing constraints, lack of structure, and insufficient context. According to the researchers, most AI prompt mistakes stem not from the model’s limitations but from “structural defects” in the instructions themselves.

This aligns with what we observe daily at Arti-Trends: the clearer the intent and the tighter the structure, the stronger and more predictable the output.

Read the full study on arXiv.

The conclusion: most weak AI output doesn’t originate in the model itself but in structural defects in the prompt. Reliable output follows once you understand how prompts are architected at a structural level, as explained in AI Prompt Frameworks Explained: The 4C Model and Beyond.

2. Missing Roles That Reduce Expertise

Leaving out a role is one of the most overlooked AI prompt mistakes. If you don’t tell the model who to be, it defaults to a generic assistant voice: neutral, flavorless, and noncommittal.

Weak prompt

“Explain blockchain.”

Strong prompt

“You are a senior fintech lecturer. Explain blockchain to a beginner audience using simple analogies.”

Roles define tone, vocabulary, reasoning depth, and perspective.

This mechanism is explored in detail in Act as a… Prompts: How Roles Transform AI Output.
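If you work through an API rather than a chat window, the role usually lives in a separate system message. The sketch below only builds the message list; the `role`/`content` dictionary layout mirrors the format many chat APIs accept, but `with_role` is a hypothetical helper and nothing here calls a real service.

```python
def with_role(role: str, task: str) -> list[dict[str, str]]:
    """Put the role in a system message and the task in a user message.

    The dict layout mirrors common chat-API message formats;
    no API is called here.
    """
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]


messages = with_role(
    "a senior fintech lecturer",
    "Explain blockchain to a beginner audience using simple analogies.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the role separate from the task lets you reuse one role across many requests.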

3. Not Defining the Output Format

Unless you specify a structure, AI picks one for you, often plain paragraphs or loosely organized bullets. That makes the output harder to scan, compare, or repurpose.

Example

“List the top productivity tools.”

If you want structured clarity, say so:

“List the top productivity tools in a table with columns for features, pricing, and ideal use cases.”

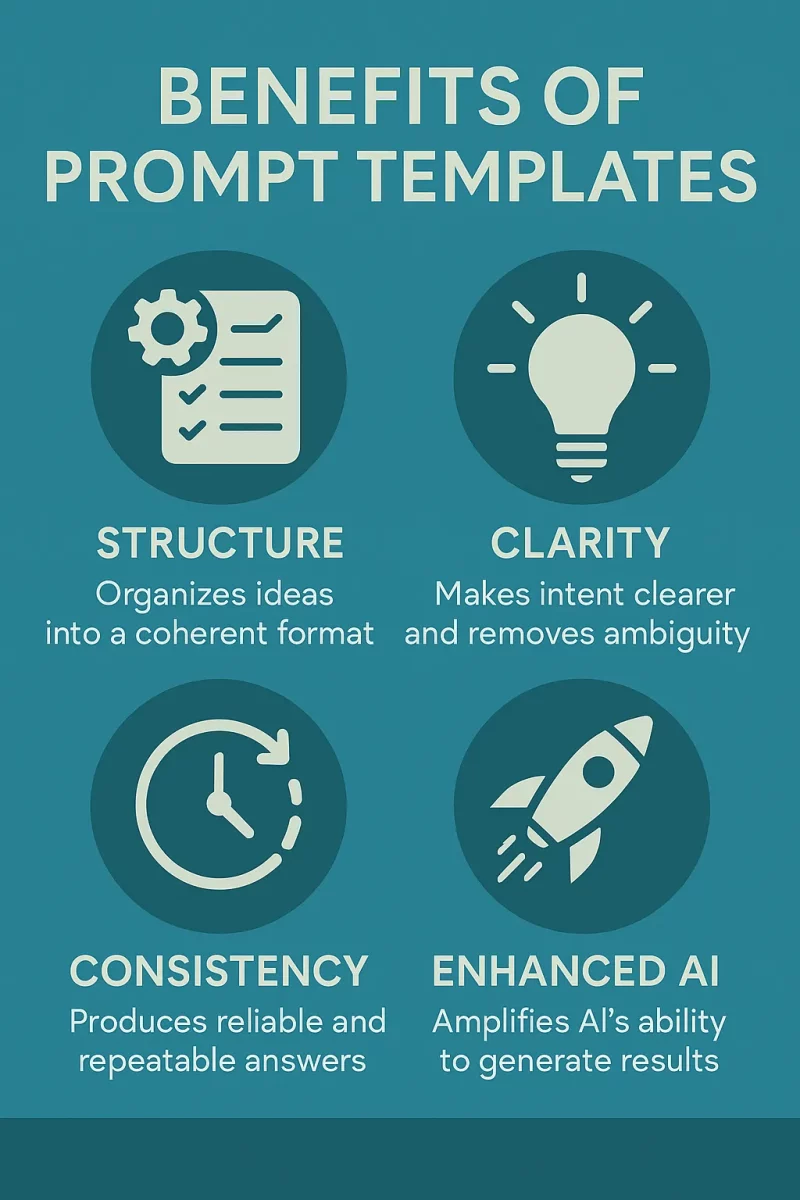

Predefined structures and formats are covered in Prompt Templates for Marketers and Creators, where prompts are designed for consistent, reusable output.
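A defined format also makes output checkable. As a rough sketch, assuming the model replies with a Markdown table, the hypothetical helper below confirms that the requested columns actually appear in the header row. The sample reply uses placeholder tools, not real product data.

```python
def table_has_columns(reply: str, required: list[str]) -> bool:
    """True if the first Markdown table row in `reply` contains every
    required column name (case-insensitive)."""
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.startswith("|"):
            header = [cell.strip().lower() for cell in stripped.strip("|").split("|")]
            return all(col.lower() in header for col in required)
    return False  # no table found at all


# Placeholder reply; tool names and details are invented.
reply = """| Tool | Features | Pricing | Ideal use cases |
|---|---|---|---|
| Tool A | Task lists | Free tier | Solo planning |"""

print(table_has_columns(reply, ["Features", "Pricing", "Ideal use cases"]))  # True
print(table_has_columns("Here are some tools...", ["Features"]))  # False
```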

4. Overloading the Prompt With Too Many Tasks

Another common AI prompt mistake is trying to make the model do everything at once.

Overloaded prompt

“Write a product description, brainstorm 10 slogans, outline a marketing plan, and compare competitors.”

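Models usually produce better results when each task gets its own step: first the product description, then the slogans, then the plan, then the competitor comparison.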

Breaking complex tasks into structured reasoning steps is explained in Chain-of-Thought Prompting: Make AI Think Step-by-Step.
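A closely related technique is prompt chaining: one focused prompt per task, with each result fed into the next. The sketch below uses a hypothetical `fake_model` stand-in so it runs offline; swap in your own model client. The competitor comparison is left out because it doesn’t depend on the earlier steps and can run as its own prompt.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"[model output for: {prompt.splitlines()[0]}]"


STEPS = [
    "Write a product description for the product below.",
    "Brainstorm 10 slogans based on this product description.",
    "Outline a marketing plan that uses these slogans.",
]


def run_chain(steps: list[str], brief: str, model: Callable[[str], str]) -> list[str]:
    """Run one prompt per step, passing each output into the next prompt."""
    outputs = []
    previous = brief
    for step in steps:
        prompt = f"{step}\n\n{previous}"
        previous = model(prompt)
        outputs.append(previous)
    return outputs


results = run_chain(STEPS, "A reusable smart water bottle.", fake_model)
for r in results:
    print(r)
```

Each step stays small enough to review on its own, which also makes it easier to spot where a chain goes wrong.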

5. Not Defining the Goal or Purpose

If you don’t explain what the output is for, AI can’t optimize the depth, tone, or structure.

Example

“Write a paragraph about productivity tools.”

A paragraph for what?

A blog? A sales funnel? A newsletter? A pitch?

Strong version

“Write a 120-word intro for a blog targeting freelancers who want to streamline their workflow.”

Every strong prompt includes a destination.

6. Treating AI Like a One-Shot Machine

Beginners stop after the first answer. Professionals iterate.

Iteration turns decent output into exceptional output.

Many structured refinement prompts appear in How to Write Better ChatGPT Prompts (with Examples).

Examples of refinement steps:

- “Rewrite this in a sharper tone.”

- “Add two examples.”

- “Make it 30% more concise.”

- “Improve transitions.”

Iteration is where quality is born.
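Iteration works because each follow-up is read alongside the earlier draft. A minimal sketch of that loop, assuming a chat-style history: `fake_chat` is a hypothetical stand-in that just labels drafts, and `refine` is an illustrative helper, not any tool’s API.

```python
from typing import Callable

Message = dict[str, str]


def fake_chat(history: list[Message]) -> str:
    """Hypothetical model stand-in: labels each draft by user turn count."""
    return f"draft v{sum(1 for m in history if m['role'] == 'user')}"


def refine(first_prompt: str, follow_ups: list[str],
           chat: Callable[[list[Message]], str]) -> list[Message]:
    """Keep the whole conversation so each refinement builds on the last draft."""
    history = [{"role": "user", "content": first_prompt}]
    history.append({"role": "assistant", "content": chat(history)})
    for instruction in follow_ups:
        history.append({"role": "user", "content": instruction})
        history.append({"role": "assistant", "content": chat(history)})
    return history


history = refine(
    "Write a 120-word intro for a blog targeting freelancers.",
    ["Rewrite this in a sharper tone.", "Add two examples.", "Make it 30% more concise."],
    fake_chat,
)
print(history[-1]["content"])  # draft v4
```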

7. Not Setting Constraints (Letting AI Wander Off)

Constraints are not limitations — they’re precision tools.

Without them, models produce broad, unfocused answers.

Examples of constraints:

- word count

- tone of voice

- audience

- specific required elements

- platform context

8. Asking for Creativity Without Direction

“Be creative” is too vague — it lacks guardrails.

Creativity thrives inside boundaries:

- “Write this in the tone of a TED speaker.”

- “Give it the storytelling rhythm of Seth Godin.”

- “Use vivid imagery and short sentences.”

Guardrails like these prevent one of the subtlest AI prompt mistakes: unclear creative expectations.

9. Not Using Few-Shot Examples

Few-shot prompting (including a few examples of the output you want) makes results noticeably more consistent.

If you want AI to mimic a specific tone, structure, or rhythm, show it what “good” looks like.

This technique is explored in depth in Few-Shot vs Zero-Shot Prompting: When to Use Which, including when examples outperform instructions.

Example

“Here are two example headlines I like. Create five more in the same style.”

AI learns patterns from patterns.
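Assembling a few-shot prompt is mostly careful formatting: label each example clearly, then state the request. In this sketch `few_shot_prompt` is a hypothetical helper and both headlines are invented placeholders; use examples you actually like.

```python
def few_shot_prompt(examples: list[str], instruction: str) -> str:
    """Show the model what 'good' looks like before asking for more."""
    shown = "\n".join(f"Example {i}: {ex}" for i, ex in enumerate(examples, start=1))
    return f"Here are {len(examples)} example headlines I like:\n{shown}\n\n{instruction}"


prompt = few_shot_prompt(
    [
        "Stop Managing Tasks. Start Finishing Them.",  # invented placeholder
        "Your Calendar Is Lying to You",               # invented placeholder
    ],
    "Create five more headlines in the same style.",
)
print(prompt)
```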

10. Not Evaluating Output Against Your Strategy

Even perfect prompts can produce imperfect answers.

You must evaluate whether the output aligns with:

- your tone

- your goals

- your accuracy needs

- your brand strategy
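Part of that evaluation can be automated. The sketch below, built around a hypothetical `check_output` helper, covers only what is mechanically measurable (length, required elements, banned phrases); tone, accuracy, and brand fit still need human review. The draft text and the banned term are invented examples.

```python
def check_output(text: str, max_words: int,
                 required_terms: list[str], banned_terms: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the measurable checks passed."""
    problems = []
    word_count = len(text.split())
    if word_count > max_words:
        problems.append(f"too long: {word_count} words (limit {max_words})")
    lowered = text.lower()
    for term in required_terms:
        if term.lower() not in lowered:
            problems.append(f"missing required element: {term}")
    for term in banned_terms:
        if term.lower() in lowered:
            problems.append(f"contains banned phrase: {term}")
    return problems


draft = "Freelancers juggle clients, invoices, and deadlines. The right tools make it manageable."
print(check_output(draft, max_words=120,
                   required_terms=["freelancers", "tools"], banned_terms=["synergy"]))
# []
```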

For benchmarking, testing, and refining prompt output at scale, see Top AI Prompt Tools to Boost Productivity in 2026.

Conclusion: Fixing AI Prompt Mistakes Makes You a Better Thinker

Fixing these issues doesn’t just improve AI output — it sharpens your thinking.

You:

- communicate more precisely

- think more structurally

- collaborate more effectively with AI

- get predictable, high-quality results

To deepen your mastery, continue with the full Prompt Mastery series or revisit the cornerstone article:

AI Prompt Writing: The Ultimate Guide to Working Smarter (2026).

The better your prompts, the smarter your AI’s output becomes.

For a complete overview of all prompt guides, frameworks, templates, and use cases, visit the AI Prompts Hub.

Related Reading from the Prompt Cluster

If you want to go deeper into specific techniques and use cases, these guides expand on the core ideas in this article:

- AI Prompt Writing Guide 2026 — The complete foundation for modern prompting and structured AI collaboration.

- How to Write Better ChatGPT Prompts (with Examples) — Practical, copy-and-paste prompts that show how small wording changes transform output quality.

- AI Prompt Frameworks Explained: The 4C Model and Beyond — Structured models that make your prompts more consistent, scalable, and teachable.

- Act as a… Prompts: How Roles Transform AI Output — How role assignment upgrades AI from generic assistant to specialist expert.

- Prompt Templates for Marketers and Creators — Ready-to-use prompt blueprints for SEO, campaigns, social content, and storytelling.

- Top AI Prompt Tools to Boost Productivity in 2026 — The tools, optimizers, and testing platforms that help you refine and scale your prompts.

- Best Prompt Libraries & Communities for AI Creators — Curated places to learn from other creators, share your best prompts, and stay ahead of new patterns.

- Multimodal AI Tools 2026: The Next Evolution of Human-Machine Collaboration — How prompting expands beyond text into images, audio, video, and real-time interaction.

- The Future of AI Workflows: From Prompts to Autonomous Systems — A strategic look at how prompts evolve into agents, workflows, and fully autonomous systems.