Published November 20, 2025 · Updated January 6, 2026

How to unlock deeper reasoning, clearer logic, and more accurate AI output.

Artificial intelligence has reached a point where raw model power is no longer the main differentiator. The advantage now comes from how well you guide the model’s reasoning — especially on complex tasks where accuracy and logic matter.

Chain-of-Thought prompting is one of the most effective ways to do that. Instead of asking for a final answer immediately, you guide the model through a structured reasoning path — so the output becomes clearer, more consistent, and easier to validate.

In Part 1 of the Prompt Mastery series, we explored how small refinements can dramatically upgrade results in How to Write Better ChatGPT Prompts (with Examples).

In Part 2, we showed how assigning AI a professional identity sharpens reasoning in Act as a… Prompts: How Roles Transform AI Output.

Now, in Part 3, we dive into Chain-of-Thought prompting: what it is, why it works, when to use it (and when not to), and how to apply it with practical examples.

For the complete foundation of prompt writing, revisit AI Prompt Writing: The Ultimate Guide to Working Smarter (2026).

This guide is part of the Prompt Writing Cluster and connects naturally with:

- Few-Shot vs Zero-Shot Prompting: When to Use Which

- AI Prompt Frameworks Explained: The 4C Model & Beyond

- Common Prompt Writing Mistakes (and How to Fix Them)

- Top AI Prompt Tools to Boost Productivity in 2026

Let’s dive in.

What Is Chain-of-Thought Prompting?

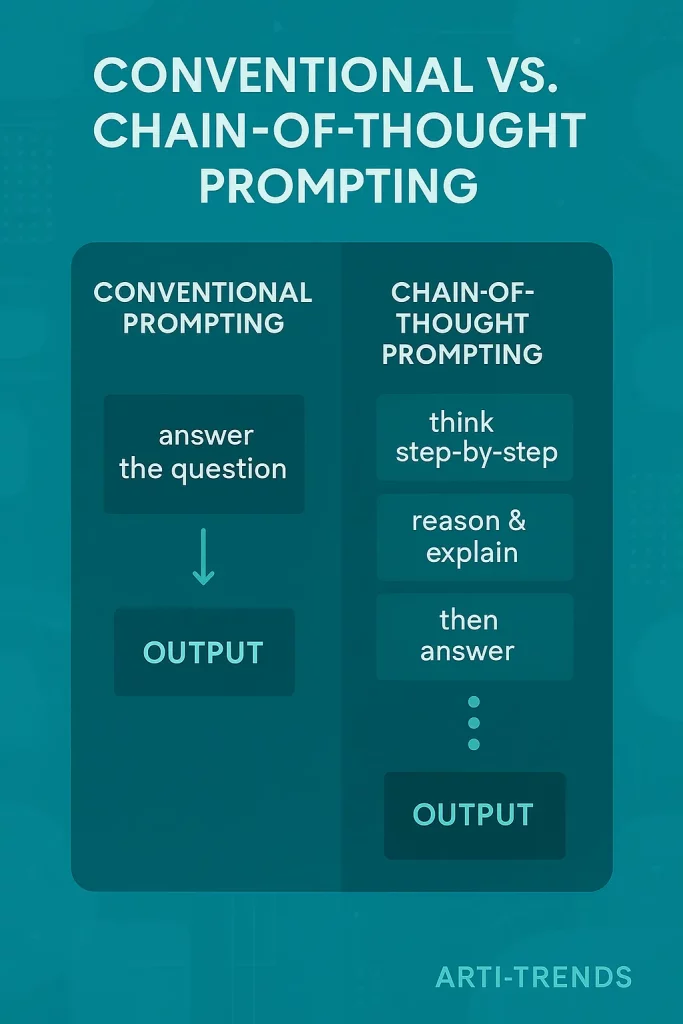

Chain-of-Thought prompting is a technique where you explicitly ask the AI to show its reasoning step-by-step, rather than only giving you the final answer.

Instead of responding with:

“The answer is 42.”

You guide the model to produce:

“Step 1 → Step 2 → Step 3 → Final answer.”

This creates:

- clearer logic

- more accurate output

- fewer hallucinations

- predictable structure

- better reasoning for complex tasks

CoT doesn’t give the model “more intelligence”; it gives it more structure.
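As a minimal sketch of the difference, the Python snippet below builds a direct prompt and a Chain-of-Thought version of the same question. The function names and the exact instruction wording are illustrative assumptions, not a fixed standard; the resulting strings can be pasted into any chat model.

```python
def direct_prompt(question: str) -> str:
    """Ask for the final answer only."""
    return f"{question}\nGive only the final answer."


def cot_prompt(question: str) -> str:
    """Ask the model to show numbered reasoning steps before the answer."""
    return (
        f"{question}\n"
        "Think step-by-step. Number each step, "
        "then finish with a line that starts with 'Final answer:'."
    )


question = "A store sells pens at 3 for $2. How much do 12 pens cost?"
print(direct_prompt(question))
print()
print(cot_prompt(question))
```

Asking for a fixed "Final answer:" line is a design choice that makes the conclusion easy to find once the reasoning gets long.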

This structural approach builds directly on the principles explained in AI Prompt Frameworks Explained: The 4C Model and Beyond, where prompt clarity and reasoning flow are designed deliberately.

Why Chain-of-Thought Prompting Works

Here’s why CoT is so effective:

A) Large Language Models learn from patterns — not facts

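A language model doesn’t look up a stored answer.

It predicts each next token from the text so far, using patterns learned in training.

When the model writes out its reasoning first, every intermediate step becomes part of that text, so the final answer is conditioned on worked-out steps instead of being produced in a single jump.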

B) Structured reasoning reduces hallucination risk

When the reasoning chain is explicit, the model is less likely to “jump” to a wrong conclusion.

You’re guiding the AI to think more like a human analyst.

This directly addresses one of the most common failure points in prompting, explored in Common Prompt Writing Mistakes (and How to Fix Them) — unclear intent and missing structure.

C) CoT stabilizes answers across model versions

Even newer models (GPT-5, Claude 3.5) can be inconsistent when asked directly.

CoT tends to make outcomes more uniform and repeatable, because each run follows the same reasoning structure.

D) It creates transparency

You understand why the model reached its conclusion — critical for:

- decision-making

- compliance

- analysis

- technical work

E) It allows you to correct the model along the way

If a step looks wrong, you can intervene early instead of getting an incorrect final answer.
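To review a chain step by step, it helps to split the response into individual steps first. The sketch below assumes the model was asked to label its reasoning as "Step 1:", "Step 2:", and so on; the sample response is written by hand for illustration, and `split_steps` is a hypothetical helper, not part of any library.

```python
import re


def split_steps(response: str) -> list[str]:
    """Return the text of each 'Step N:' line, in order of appearance."""
    pattern = re.compile(
        r"^\s*Step\s+\d+\s*[:.)]\s*(.+)$", re.MULTILINE | re.IGNORECASE
    )
    return [match.group(1).strip() for match in pattern.finditer(response)]


# Hypothetical model output, written by hand for this example.
sample_response = """Step 1: 12 pens is 4 groups of 3 pens.
Step 2: Each group costs $2.
Step 3: 4 groups x $2 = $8.
Final answer: $8"""

for number, step in enumerate(split_steps(sample_response), start=1):
    print(f"[review] step {number}: {step}")
```

With the steps separated, a reviewer can check them one at a time and ask the model to redo the chain from the first step that looks wrong.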

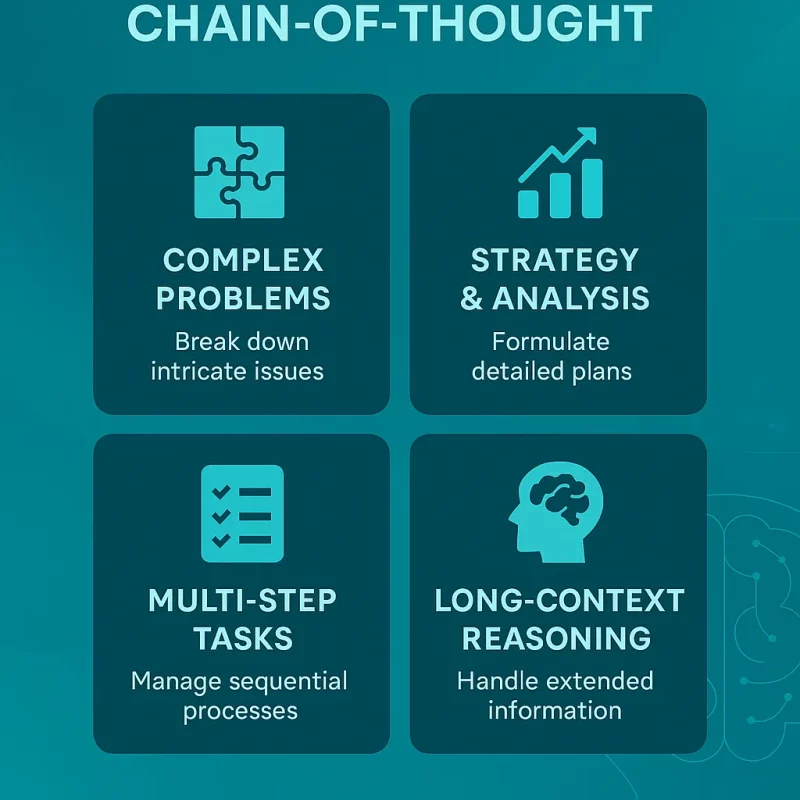

When to Use Chain-of-Thought Prompting

CoT is ideal for any task that involves:

✔ Multi-step reasoning

Strategy, analysis, diagnosis, planning, evaluation.

✔ Math, logic, or problem-solving

(Even large models can slip on multi-step math and logic when they answer in one jump.)

✔ Research & synthesis

Summaries, insights, extracting meaning from documents.

✔ Decision-making

Pros/cons, ranking, frameworks, trade-offs.

✔ Business workflows

Marketing plans, financial breakdowns, product roadmaps.

✔ Coding & debugging

Walking step-by-step through a code issue.

If your task requires thinking, CoT helps the model think more reliably.

When NOT to Use Chain-of-Thought Prompting

CoT is powerful — but not always appropriate.

❌ Short-form content (tweets, captions)

CoT overcomplicates simple tasks.

❌ Creative writing

It removes spontaneity and makes output robotic.

❌ Sensitive or confidential outputs

Some models do not expose their full reasoning for safety reasons, so asking for it may return only a summary.

❌ Tasks where speed > accuracy

CoT makes the model generate more tokens, which increases response time.

❌ When you want the AI to be creative, not logical

You don’t want step-by-step reasoning for poetry.

Use CoT strategically — not universally.
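One way to apply this strategically in a workflow is a small routing rule that decides per task whether to add CoT instructions. The sketch below is a rough heuristic under stated assumptions: the task labels are made up for illustration from the two lists above, and any real system would need its own categories.

```python
# Task labels are illustrative assumptions drawn from the two lists above,
# not an official taxonomy.
COT_TASKS = {"analysis", "math", "research", "decision", "planning", "debugging"}


def pick_prompt_style(task_type: str) -> str:
    """Return 'cot' for reasoning-heavy task types and 'direct' for everything else."""
    if task_type.strip().lower() in COT_TASKS:
        return "cot"
    # Tweets, captions, poetry, and unknown task types fall through to a direct prompt.
    return "direct"


for task in ["debugging", "tweet", "poetry", "decision"]:
    print(f"{task}: {pick_prompt_style(task)}")
```

Defaulting unknown tasks to a direct prompt keeps simple requests fast; a team that values accuracy over speed might reverse that default.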

Chain-of-Thought Prompting Examples

Example 1 — Business Decision-Making

Prompt (with CoT):

“Evaluate whether we should launch Product X in Q2. Think step-by-step: assess market demand, competition, internal readiness, risks, and expected ROI.”

Result:

A structured 5-part analysis instead of a shallow yes/no.
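If you run this kind of evaluation often, the prompt can be assembled from a list of factors. This is a minimal sketch; `decision_prompt` and its wording are assumptions made for illustration, and the factor list simply reuses the five items from the prompt above.

```python
def decision_prompt(decision: str, factors: list[str]) -> str:
    """Build a CoT prompt that asks the model to assess each factor in order."""
    numbered = "\n".join(
        f"{i}. {factor}" for i, factor in enumerate(factors, start=1)
    )
    return (
        f"Evaluate whether we should {decision}.\n"
        "Think step-by-step and assess each factor in order:\n"
        f"{numbered}\n"
        "End with a clear recommendation."
    )


factors = ["market demand", "competition", "internal readiness", "risks", "expected ROI"]
print(decision_prompt("launch Product X in Q2", factors))
```

Numbering the factors gives the model an explicit order to follow, which makes it easier to see whether any factor was skipped in the answer.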

Example 2 — Coding & Debugging

Prompt:

“Find the bug in this code. Explain your reasoning step-by-step before suggesting a fix.”

Result:

The model reveals how it analyzes the code, making errors easier to catch.
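Here is a rough sketch of how that debugging prompt might be assembled in Python. The buggy `average` function and the `debug_prompt` helper are invented for illustration; the instruction text is the prompt from this example, with an observed symptom added as an assumption about what usually helps.

```python
# A deliberately buggy function, invented for this example:
# the trailing "- 1" makes the average too low.
BUGGY_CODE = '''def average(values):
    return sum(values) / len(values) - 1
'''


def debug_prompt(code: str, symptom: str) -> str:
    """Build a CoT debugging prompt that includes the code and the observed symptom."""
    return (
        "Find the bug in this code. "
        "Explain your reasoning step-by-step before suggesting a fix.\n\n"
        f"Observed problem: {symptom}\n\n"
        "Code:\n"
        f"{code}"
    )


print(debug_prompt(BUGGY_CODE, "average([2, 4]) returns 2.0 instead of 3.0"))
```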

Example 3 — Strategy

Prompt:

“Create a step-by-step plan to expand our SaaS product into European markets. Break down each step with reasoning.”

Result:

A detailed expansion roadmap instead of a vague list.

Example 4 — Learning & Knowledge Tasks

Prompt:

“Explain reinforcement learning in a step-by-step Chain-of-Thought format.”

Result:

The model builds explanations layer by layer.

Example 5 — Comparisons

Prompt:

“Compare Notion AI and Jasper step-by-step: strengths, weaknesses, ideal users, and pricing.”

Result:

A structured, thorough comparison.

How Chain-of-Thought Relates to Few-Shot Prompting

These two techniques work extremely well together, as shown in Few-Shot vs Zero-Shot Prompting: When to Use Which, where examples teach structure and CoT teaches reasoning.

Zero-shot → Direct answer

Good for simple tasks.

Few-shot → Example-driven output

Good for structure and tone.

Chain-of-Thought → Reasoning

Good for accuracy and logic.

But the magic happens when you combine them:

👉 Few-shot examples that include Chain-of-Thought reasoning often produce more consistent and accurate results than either technique alone.

For example:

Few-shot CoT Prompt:

“Here’s how to solve this type of problem, step-by-step…”

→ AI repeats the reasoning pattern.

This hybrid method is perfect for strategy, analysis, coding, operations, and complex business tasks.
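A few-shot CoT prompt can be sketched as worked examples followed by the new question. The code below is one possible layout, not a standard format: the example problem is hand-written, and the "Question / Step N / Final answer" labels are assumptions chosen for readability.

```python
# One hand-written worked example; real prompts often use two or three.
EXAMPLES = [
    {
        "question": "A train travels 60 km in 1.5 hours. What is its average speed?",
        "reasoning": [
            "Average speed is distance divided by time.",
            "60 km / 1.5 h = 40 km/h.",
        ],
        "answer": "40 km/h",
    },
]


def few_shot_cot_prompt(examples: list[dict], new_question: str) -> str:
    """Join worked examples, then pose the new question in the same layout."""
    parts = []
    for example in examples:
        steps = "\n".join(
            f"Step {i}: {text}"
            for i, text in enumerate(example["reasoning"], start=1)
        )
        parts.append(
            f"Question: {example['question']}\n{steps}\n"
            f"Final answer: {example['answer']}"
        )
    # Leave the last block open at "Step 1:" so the model continues the pattern.
    parts.append(f"Question: {new_question}\nStep 1:")
    return "\n\n".join(parts)


print(few_shot_cot_prompt(EXAMPLES, "A car travels 150 km in 2.5 hours. What is its average speed?"))
```

Ending the prompt at "Step 1:" nudges the model to answer in the same step-by-step layout as the examples.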

A Simple CoT Template You Can Use Right Away

Here’s a reusable template you can modify for any situation:

Chain-of-Thought Master Template

“Let’s solve this step-by-step.

First, restate the problem.

Then break your reasoning into clear steps.

Explain your logic at each stage.

End with a concise final answer or recommendation.”

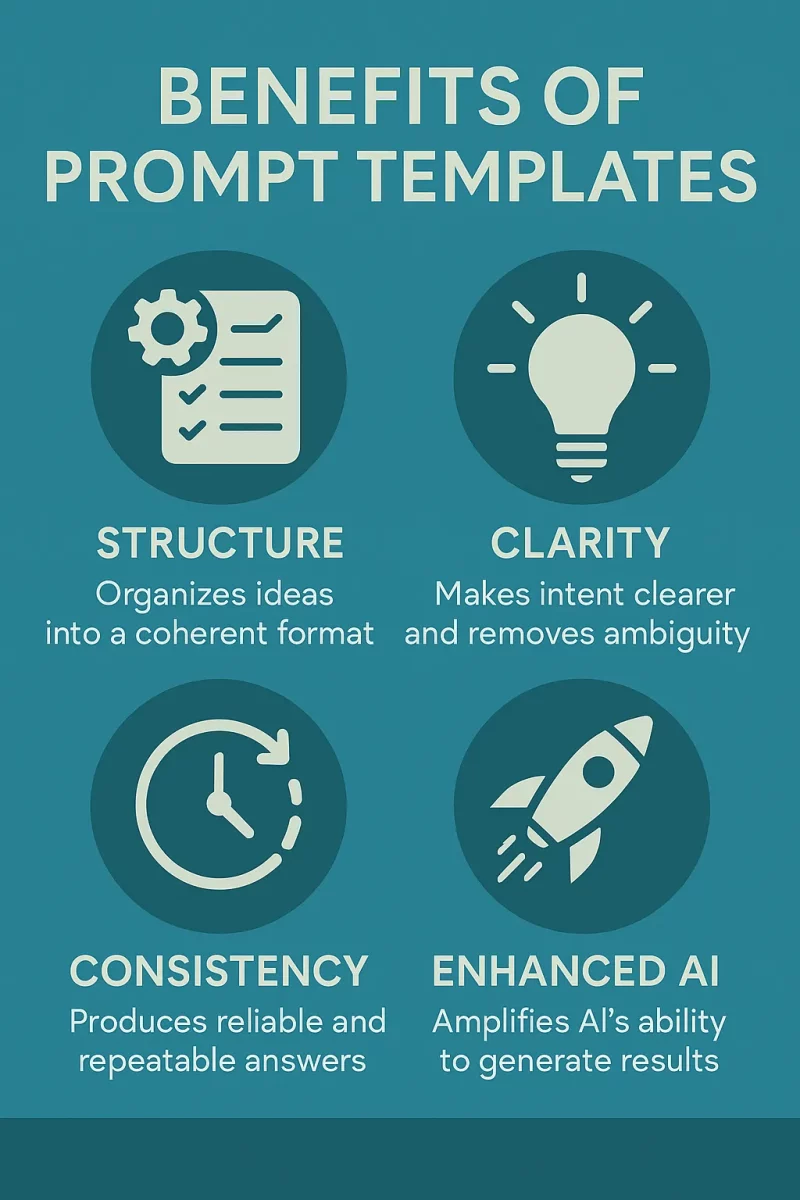

If you prefer reusable structures instead of one-off prompts, this template pairs naturally with the systems described in Prompt Templates for Marketers & Creators.
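To reuse the master template in scripts, it can be stored as a string with a slot for the problem. This is a minimal sketch; the `{problem}` placeholder and the `apply_template` helper are assumptions added for illustration, while the instruction lines are the template text itself.

```python
COT_MASTER_TEMPLATE = (
    "Let's solve this step-by-step.\n"
    "First, restate the problem.\n"
    "Then break your reasoning into clear steps.\n"
    "Explain your logic at each stage.\n"
    "End with a concise final answer or recommendation.\n\n"
    "Problem: {problem}"
)


def apply_template(problem: str) -> str:
    """Fill the master template with a specific problem statement."""
    return COT_MASTER_TEMPLATE.format(problem=problem)


print(apply_template("Should we move our weekly newsletter from Tuesday to Thursday?"))
```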

Want something more advanced?

Chain-of-Thought + Framework Template

“Think step-by-step using a structured approach.

Use this reasoning flow:

- Context

- Variables

- Constraints

- Options

- Evaluation

- Final decision

Explain each step clearly before you deliver your final recommendation.”
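The reasoning flow can also be kept as data, so the stages are easy to swap per project. The sketch below is one way to do that under stated assumptions: `framework_prompt` is a hypothetical helper, the "Task:" line is an addition, and the default stages are the six listed in the template.

```python
from typing import Sequence

REASONING_FLOW = (
    "Context",
    "Variables",
    "Constraints",
    "Options",
    "Evaluation",
    "Final decision",
)


def framework_prompt(task: str, flow: Sequence[str] = REASONING_FLOW) -> str:
    """Build a CoT prompt that walks through the given reasoning stages in order."""
    stages = "\n".join(f"- {stage}" for stage in flow)
    return (
        f"Task: {task}\n\n"
        "Think step-by-step using a structured approach.\n"
        "Use this reasoning flow:\n"
        f"{stages}\n"
        "Explain each step clearly before you deliver your final recommendation."
    )


print(framework_prompt("Choose a pricing model for our new subscription tier."))
```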

This method plays well with the other techniques in the Prompt Writing Cluster, including:

- 4C Framework (Context → Constraints → Criteria → Command)

- Role prompts

- Few-shot examples

- Prompt templates

- AI Tools (Claude, PromptPerfect, Prompt Studio, Jasper)

Limitations of Chain-of-Thought Prompting

CoT is powerful, but:

1. It can hallucinate with more confidence

If the model’s reasoning goes wrong early, every later step builds on the error, and the tidy step-by-step format can make the wrong answer look convincing.

2. It increases token usage

This has cost implications.

3. Some models hide internal reasoning

For safety and security reasons.

4. Step-by-step doesn’t mean correct

Structure ≠ accuracy unless you guide it well.

5. CoT does not replace validation

Always verify factual outputs.
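The token-usage point (limitation 2) is easy to put into numbers. The sketch below is back-of-the-envelope arithmetic only: the token counts, request volume, and price are hypothetical placeholders, so substitute your own measurements and your provider's current pricing.

```python
def output_cost(tokens_per_reply: int, replies: int, price_per_million_tokens: float) -> float:
    """Cost in dollars of generating `replies` responses of a given length."""
    return tokens_per_reply * replies / 1_000_000 * price_per_million_tokens


# The numbers below are hypothetical placeholders, not real measurements or prices.
DIRECT_TOKENS = 20   # short direct answer
COT_TOKENS = 400     # answer with written-out reasoning
REPLIES = 10_000     # responses generated per day
PRICE = 10.0         # dollars per million output tokens

direct = output_cost(DIRECT_TOKENS, REPLIES, PRICE)
cot = output_cost(COT_TOKENS, REPLIES, PRICE)
print(f"direct: ${direct:.2f}  cot: ${cot:.2f}  ratio: {cot / direct:.0f}x")
```

The ratio matters more than the absolute figures: if reasoning makes replies many times longer, output cost and latency grow by roughly the same factor.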

Best Tools for Chain-of-Thought Prompting (2026)

CoT works best inside tools that support complex reasoning workflows:

✔ Claude Workflows

Exceptional for long-context reasoning and analysis.

✔ PromptPerfect

Optimizes your CoT prompts for clarity and accuracy.

✔ OpenAI Prompt Studio

Builds and tests structured reasoning flows.

✔ TypingMind

Great for saving CoT patterns + managing long projects.

✔ GitHub Copilot

Uses step-by-step explanations for code analysis.

If you want a broader overview, read Top AI Prompt Tools to Boost Productivity in 2026.

In strategic, operational, and decision-making environments, Chain-of-Thought prompting becomes especially powerful when embedded into structured workflows — a practical application explored in AI Prompts for Business & Strategy.

Conclusion

Chain-of-Thought prompting is one of the most powerful techniques in modern prompt engineering. It doesn’t make AI smarter — it makes reasoning visible, structured, and correctable.

By guiding models step-by-step, you gain clearer logic, fewer hallucinations, and more predictable outcomes. This is essential when accuracy, transparency, and decision quality matter.

The real power emerges when Chain-of-Thought is combined with clear roles, structured frameworks, and few-shot examples. At that point, AI stops behaving like a reactive assistant and starts functioning as a reasoning partner you can trust.

For a complete overview of all prompt techniques, frameworks, templates, and real-world applications, visit the AI Prompts Hub.

To apply Chain-of-Thought inside strategic, operational, and decision-making workflows, continue with AI Prompts for Business & Strategy.

And to understand how reasoning-based prompting evolves into agentic and autonomous systems, explore The Future of AI Workflows: From Prompts to Autonomous Systems.

Related Reading from the Prompt Cluster

If you want to deepen your understanding of structured prompting and AI reasoning, these guides expand on the core ideas in this article:

- AI Prompt Writing Guide 2026 — The complete foundation for modern prompting and structured AI collaboration.

- How to Write Better ChatGPT Prompts (with Examples) — Practical, copy-and-paste prompts that show how small wording changes dramatically improve output quality.

- AI Prompt Frameworks Explained: The 4C Model and Beyond — Learn how professional frameworks create clarity, reduce ambiguity, and improve reasoning consistency.

- Act as a… Prompts: How Roles Transform AI Output — How assigning AI a professional role sharpens reasoning, tone, and domain expertise.

- Few-Shot vs Zero-Shot Prompting: When to Use Which — Understand how examples teach structure, patterns, and reasoning alignment.

- Prompt Templates for Marketers and Creators — Ready-to-use prompt blueprints that combine structure, constraints, and reasoning patterns.

- Common Prompt Writing Mistakes (and How to Fix Them) — A diagnostic guide to identifying weak prompts and correcting structural flaws.

- Top AI Prompt Tools to Boost Productivity in 2026 — Platforms and tools that support structured reasoning, testing, and prompt iteration.

- The Future of AI Workflows: From Prompts to Autonomous Systems — A strategic look at how structured prompting evolves into agentic and autonomous AI workflows.